22. Long Polling Chat, POST Reading. Pt 1.

Hi everyone! Today we are going to learn how to build a chat in Node.js. Our first chat will be fairly simple. Everyone who opens this URL

http://localhost:3000/

will automatically join the room and receive messages from the other users in the same room. Users can chat from different browsers. To begin with, the chat will have no user accounts, no database and no authorization.

First, let's see how everything is organized.

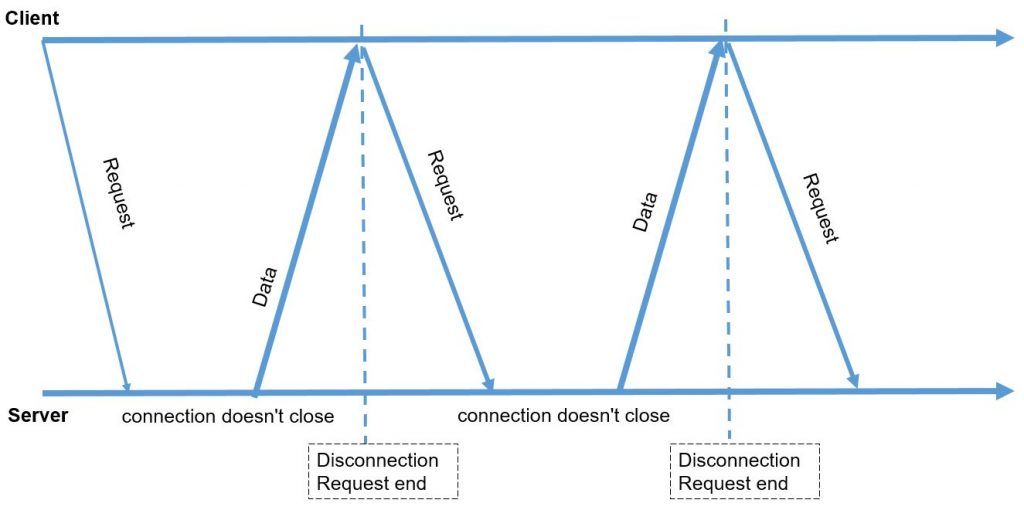

The technique used here to communicate with the server is called long polling.

On the one hand, it is very simple; on the other hand, it is good enough for 90% of tasks where you need to communicate with the server. Let's take a closer look. When the client wants to receive data from the server, it sends an ordinary XMLHttpRequest. What is unusual is how the server handles it: instead of responding immediately, it leaves the request hanging. Later, when there is data for the client, the server responds to that request. The client receives the response, handles and displays it, and then makes a new request to the server. If the server has no data, it waits again; as soon as data appears, it responds immediately. In effect, the client keeps an open connection to the server so that it can receive data as soon as it is ready to be sent.
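To make the hold-and-respond cycle concrete, here is a minimal in-memory simulation in Node.js. It is only a sketch under simplifying assumptions: there is no network at all, a "request" is just a callback that the server parks, and the names `poll`, `deliver` and `clientLoop` are hypothetical.

```javascript
// In-memory simulation of long polling (no HTTP involved).
// A "request" is a callback that the server holds until it has data.
const pending = []; // parked requests waiting for data

// Server side: a new poll is parked instead of being answered right away.
function poll(onData) {
  pending.push(onData);
}

// Server side: when data appears, answer every parked request exactly once.
function deliver(data) {
  const waiting = pending.splice(0, pending.length); // take them out first
  waiting.forEach((respond) => respond(data));
}

// Client side: handle a response, then immediately poll again.
const received = [];
function clientLoop() {
  poll((data) => {
    received.push(data);
    clientLoop(); // re-subscribe, like subscribe() in index.html
  });
}

clientLoop();
deliver('hello');
deliver('world');
console.log(received); // [ 'hello', 'world' ]
```

`deliver` removes the waiting callbacks from `pending` before calling them. Each callback re-polls synchronously, so the new request lands in the now-empty queue and waits for the next delivery instead of being wiped out by the current one.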

The respective code at the client’s side looks as follows, so let us create index.html:

```html
<!DOCTYPE html>
<html>
<head>
  <meta charset="utf-8">
  <link href="//netdna.bootstrapcdn.com/twitter-bootstrap/2.3.2/css/bootstrap-combined.min.css" rel="stylesheet">
</head>
<body class="container">

<p class="lead">Welcome to our chat!</p>

<!-- form for sending messages -->
<form id="publish" class="form-inline">
  <input type="text" name="message"/>
  <input type="submit" class="btn btn-primary" value="Send"/>
</form>

<!-- incoming messages are appended here -->
<ul id="messages"></ul>

<script>
  // Send the typed message to the server as JSON: {"message": "..."}
  publish.onsubmit = function() {
    var xhr = new XMLHttpRequest();
    xhr.open("POST", "/publish", true);
    xhr.send(JSON.stringify({message: this.elements.message.value}));

    this.elements.message.value = '';
    return false; // prevent the normal form submission
  };

  // Start the long-polling loop
  subscribe();

  function subscribe() {
    var xhr = new XMLHttpRequest();
    xhr.open("GET", "/subscribe", true);

    // The server answered: show the message and immediately wait for the next one
    xhr.onload = function() {
      var li = document.createElement('li');
      li.textContent = this.responseText;
      messages.appendChild(li);

      subscribe();
    };

    // Network error or abort: try again after a short pause
    xhr.onerror = xhr.onabort = function() {
      setTimeout(subscribe, 500);
    };

    xhr.send('');
  }
</script>
</body>
</html>
```

There is a form for sending messages and a list where incoming messages appear. When the form is submitted, an XMLHttpRequest sends the message to the server, which publishes it to everyone. To receive new messages, the long-polling algorithm described above is used. The subscribe function sends an XMLHttpRequest that asks for data from the /subscribe URL. When the server responds, the response is shown as a message and subscribe is called again, which means a new request is made. This repeats in a loop. The only exception is when an error occurs or the request is aborted: in that case we call subscribe again, but after a short pause so as not to flood the server.
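The retry rule (re-poll at once on success, wait 500 ms on failure) can be tried out without a browser. The sketch below is a transport-agnostic rewrite of subscribe(), not the lesson's code: `request(onOk, onFail)` is a hypothetical stand-in for one GET /subscribe request, and `schedule` defaults to `setTimeout` but can be swapped out so the loop runs synchronously in a demo.

```javascript
// Transport-agnostic sketch of the subscribe() loop from index.html.
// request(onOk, onFail) is a hypothetical stand-in for one GET /subscribe.
function makeSubscriber(request, onMessage, schedule = setTimeout, retryMs = 500) {
  function subscribe() {
    request(
      (text) => {
        onMessage(text); // show the message...
        subscribe();     // ...and immediately open the next long poll
      },
      () => schedule(subscribe, retryMs) // error or abort: retry after a pause
    );
  }
  return subscribe;
}

// Usage with a scripted fake transport instead of a real server.
const script = [{ ok: 'hi' }, { fail: true }, { ok: 'there' }];
const shown = [];
const delays = [];

function fakeRequest(onOk, onFail) {
  const step = script.shift();
  if (!step) return; // script exhausted: the "request" just hangs, like a real long poll
  if (step.fail) onFail();
  else onOk(step.ok);
}

// Record the requested delay and run the retry right away to keep the demo synchronous.
function fakeSchedule(fn, ms) {
  delays.push(ms);
  fn();
}

makeSubscriber(fakeRequest, (text) => shown.push(text), fakeSchedule)();
console.log(shown, delays); // [ 'hi', 'there' ] [ 500 ]
```

In the browser, `request` would wrap an XMLHttpRequest with `onload` mapped to `onOk` and `onerror`/`onabort` mapped to `onFail`, which is exactly what index.html does inline.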

Note that this code simply implements the long-polling algorithm. It is not tied to any particular chat: it is just code for subscribing to messages from the server. It could be extended, for example with several message channels. For now we won't do any of that and will move on to Node.js. Here is a small template for the server part: an HTTP server that returns index.html as the main page (create server.js and add the following code):

```javascript
var http = require('http');
var fs = require('fs');

http.createServer(function(req, res) {
  switch (req.url) {
    case '/':
      sendFile("index.html", res);
      break;
    case '/subscribe':
      //..
      break;
    case '/publish':
      //..
      break;
    default:
      res.statusCode = 404;
      res.end("Not found");
  }
}).listen(3000);

// Stream a file into the response; answer 500 if it cannot be read
function sendFile(fileName, res) {
  var fileStream = fs.createReadStream(fileName);
  fileStream
    .on('error', function() {
      res.statusCode = 500;
      res.end("Server error");
    })
    .pipe(res)
    .on('close', function() {
      // the response was closed (e.g. the client disconnected): release the file
      fileStream.destroy();
    });
}
```

There are also two more URLs: '/subscribe' for subscribing to messages and '/publish' for sending them. They are the same URLs that index.html uses. We'll start with the subscription.
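One caveat with `switch (req.url)`: `req.url` includes the query string, so a request such as `/subscribe?t=1` would fall through to the 404 branch. A possible variation, not used in the lesson, is to switch on the pathname parsed with the WHATWG `URL` class built into Node.js. The helper name `pathnameOf` and the dummy base `http://localhost` are assumptions for this sketch.

```javascript
// Extract the path part of req.url, dropping any query string or hash.
// req.url is relative ("/subscribe?t=1"), so URL needs a base; its host is irrelevant.
function pathnameOf(rawUrl) {
  return new URL(rawUrl, 'http://localhost').pathname;
}

console.log(pathnameOf('/subscribe?t=1')); // → /subscribe
console.log(pathnameOf('/publish'));       // → /publish
console.log(pathnameOf('/?x=1#top'));      // → /
```

With this helper, the server's switch would read `switch (pathnameOf(req.url))` and the `case` labels stay unchanged.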

The subscribe function in index.html sends long-polling requests to the /subscribe URL. A client that has sent a request to /subscribe should not get a response right away, but we need to remember that it asked for data so that we can send the data as soon as we have it. To solve this, let's create a special object called chat. chat.subscribe will remember that a client has come; to do so, we pass it the req and res objects.

chat.publish, in turn, will send a message to all clients connected at that moment:

```javascript
http.createServer(function(req, res) {
  switch (req.url) {
    case '/':
      sendFile("index.html", res);
      break;
    case '/subscribe':
      chat.subscribe(req, res); // remember the client, don't answer yet
      //..
      break;
    case '/publish':
      chat.publish('....'); // stub: the real message is not read yet
      //..
      break;
    default:
      res.statusCode = 404;
      res.end("Not found");
  }
}).listen(3000);
```

We'll describe the chat object in a separate module placed in the current directory. Add the following line to server.js:

```javascript
var chat = require('./chat');
```

Create chat.js and add the following code:

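```javascript
var clients = []; // responses of the clients waiting for a message

exports.subscribe = function(req, res) {
  console.log("subscribe");
  clients.push(res); // keep the request open until there is something to send
};

exports.publish = function(message) {
  console.log("publish '%s'", message);

  clients.forEach(function(res) {
    res.end(message); // answer and close each held request
  });

  clients = []; // all of those connections are closed now
};
```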
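Because chat.js only ever calls `res.end`, its logic can be tried out without a browser. The sketch below inlines the same logic (minus the logging) into one file and drives it with fake response objects; `fakeResponse` is a hypothetical helper that merely records what `end` receives, not a real `http.ServerResponse`.

```javascript
// The logic of chat.js, inlined (without console.log) so this file runs on its own.
let clients = [];

function subscribe(req, res) {
  clients.push(res); // remember the waiting response; req is not used
}

function publish(message) {
  clients.forEach((res) => res.end(message)); // answer every held request
  clients = []; // those connections are closed now
}

// Hypothetical stand-in for http.ServerResponse: it only records what end() receives.
function fakeResponse(name, log) {
  return { end: (body) => log.push(`${name} got ${body}`) };
}

const log = [];
subscribe(null, fakeResponse('alice', log));
subscribe(null, fakeResponse('bob', log));
publish('hello'); // both held requests are answered
publish('later'); // nobody is subscribed any more, so this message goes nowhere
console.log(log); // [ 'alice got hello', 'bob got hello' ]
```

The last call shows a limitation of this simple design: a message published while a client is between two polls (its previous request has been answered and the next one has not arrived yet) never reaches that client.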

The module stores the current connections in the clients array.

On subscribe, it adds the new res object to this array. Note that the req object is not stored anywhere: we won't need it later, because all we have to do is send messages to this client, and res is enough for that. So whenever a request to /subscribe arrives, its res object is simply added to the clients array. We do nothing else with the connection, which is why, from the client's side, the request looks like it is hanging without a response. Later, at some point, we receive a message and call exports.publish. This method must send the message to all subscribed clients, so we loop over the array and send the response to each client:

```javascript
clients.forEach(function(res) {
  res.end(message);
});
```

res.end(message) immediately closes the connection, and afterwards we clear the clients array, because all the connections in it are already closed and we no longer need them. Let's check the code: start the server, open the chat URL in your browser and type something. Messages do arrive, but not the one we typed, because for now the publish branch on the server calls a stub:

```javascript
chat.publish("...");
```
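Replacing this stub means reading the message from the body of the POST request that publish.onsubmit sends (a JSON string such as {"message": "..."}), which is the "POST reading" part of this lesson's title. As a rough preview, here is one possible approach, not the lesson's code: since req is a readable stream, the hypothetical helper readMessage collects its chunks, parses the JSON and hands the message to a callback. The 10000-byte limit is an assumption. For the demo, a Readable from Node's stream module plays the part of req, so no server is needed.

```javascript
const { Readable } = require('stream');

// Collect a request body (a stream of Buffers) and extract its "message" field.
// maxBytes is an assumed safety limit; it is not part of the lesson's code.
function readMessage(req, callback, maxBytes = 10000) {
  const chunks = [];
  let size = 0;
  let tooLarge = false;

  req.on('data', (chunk) => {
    if (tooLarge) return; // already rejected: ignore the rest of the body
    size += chunk.length;
    if (size > maxBytes) {
      tooLarge = true;
      callback(new Error('Request body too large'));
      return;
    }
    chunks.push(chunk);
  });

  req.on('end', () => {
    if (tooLarge) return; // the callback has already been called
    let message;
    try {
      // Concatenate first so multi-byte characters split across chunks decode correctly
      message = JSON.parse(Buffer.concat(chunks).toString('utf8')).message;
    } catch (err) {
      callback(err); // e.g. malformed JSON
      return;
    }
    callback(null, message);
  });
}

// Demo: a Readable fed with one Buffer stands in for http.IncomingMessage.
const fakeReq = new Readable({ read() {} });
fakeReq.push(Buffer.from(JSON.stringify({ message: 'Hi there' })));
fakeReq.push(null); // end of the body

readMessage(fakeReq, (err, message) => {
  if (err) throw err;
  console.log(message); // → Hi there
});
```

In server.js, the '/publish' branch could then call readMessage(req, ...), pass the result to chat.publish, and finish the POST with something like res.end("ok") so the browser's request does not stay open; that wiring is likewise an assumption.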

The lesson materials were borrowed from the following screencast.

We are looking forward to meeting you on our website soshace.com
