NLP Preprocessing using Spacy


Introduction

Natural Language Processing (NLP) is a subfield of artificial intelligence that focuses on enabling computers to understand, interpret, and generate human languages.

Importance of NLP preprocessing

One crucial aspect of NLP is preprocessing, which entails cleaning and structuring raw text data to improve the performance and accuracy of subsequent NLP tasks. By eliminating irrelevant information and normalizing the text, preprocessing ensures that NLP models can efficiently process and analyze the data. I have worked on a number of NLP projects, and after collecting the data, the biggest challenge has always been preprocessing. Text data available on the internet is often highly unstructured: it contains unwanted symbols, repeated characters, different forms of the same root word, and so on. It is therefore crucial to preprocess the text before feeding it to a learning algorithm so that the algorithm's performance does not suffer.

Introduction to Spacy as a powerful NLP library

Spacy is a powerful, open-source NLP library designed to handle various preprocessing tasks with high efficiency and speed. Developed in Python, Spacy provides a wide range of functionalities, such as tokenization, lemmatization, part-of-speech tagging, and named entity recognition, to mention a few. Due to its simple yet comprehensive API and well-optimized performance, Spacy has gained popularity among researchers and industry professionals alike.

Benefits of using Spacy for preprocessing tasks

Using Spacy for NLP preprocessing offers several benefits. First and foremost, the thing I love about Spacy is its ease of use: a straightforward API lets users quickly set up and execute preprocessing tasks. Second, Spacy’s pre-trained models and customizable pipelines allow for tailored solutions that cater to specific NLP requirements. Finally, its robustness and scalability make Spacy a good choice for handling large-scale text data efficiently. By leveraging these capabilities, users can significantly enhance the quality and performance of their NLP tasks. These qualities make Spacy my favorite text preprocessing library.

Installing and setting up Spacy

Installing Spacy via pip or conda

Installing and setting up Spacy is a straightforward process, using either the pip or the conda package manager. I can run the following command in my terminal or command prompt to install Spacy using pip.

```bash
pip install spacy
```

If I need to install Spacy using conda, I run the following command instead.

```bash
conda install -c conda-forge spacy
```

Downloading language models

Once Spacy is installed, I can download the desired language model with the following command.

```bash
python -m spacy download [model_name]
```

where [model_name] is the model’s specific identifier (e.g., to download the small English model I use ‘en_core_web_sm’).

Importing Spacy and loading models in Python

In my Python script, I use the following statement to import Spacy.

```python
import spacy
```

To load the language model, I add the following line,

```python
nlp = spacy.load('[model_name]')
```

replacing [model_name] with the identifier of the downloaded model. With Spacy and the language model loaded, I can now start preprocessing my text data.
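When a script may run on a machine where the model has not been downloaded yet, the loading step can be made a little more forgiving. The sketch below is one possible approach, not part of the original setup: the helper name load_pipeline is hypothetical, and it assumes that falling back to a blank English pipeline (tokenizer only, no tagger, parser, or NER) is acceptable for the task at hand.

```python
import spacy


def load_pipeline(model_name: str = "en_core_web_sm"):
    """Load a trained pipeline, or fall back to a blank English one.

    Hypothetical helper for illustration only.
    """
    try:
        return spacy.load(model_name)
    except OSError:
        # spacy.load raises OSError when the model package is not installed.
        # A blank pipeline can still tokenize, but has no trained components.
        return spacy.blank("en")


nlp = load_pipeline()

# Show which language and components ended up being loaded
print(nlp.lang, nlp.pipe_names)
```

If the fallback is taken, attributes that depend on trained components, such as POS tags or named entities, will simply be empty, so it is only suitable for tokenization-level work.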

Tokenization

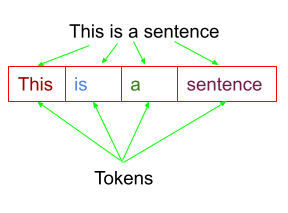

Definition and purpose of tokenization

Tokenization is a fundamental preprocessing step in Natural Language Processing (NLP) that involves breaking down a given text into smaller units, typically words, phrases, or sentences. These smaller units, called tokens, help in the analysis, understanding, and processing of the text. Tokenization is essential for tasks such as text classification, sentiment analysis, and machine translation, as it allows models to identify the words or phrases’ structure and meaning.

Sentence split into tokens

Spacy’s tokenization process

Spacy’s tokenization process is rule-based and can be adjusted through user-defined settings. The tokenizer first splits the text on whitespace and then works through each chunk, applying language-specific rules for prefixes, suffixes, and infixes, for example to split off punctuation or to break up hyphenated words. A table of special cases covers exceptions such as contractions, abbreviations, and emoticons, and a separate pattern keeps tokens such as URLs intact. Users can add their own special cases and rules on top of these defaults, which is one of the reasons why Spacy takes the lead.

Code examples for tokenization using Spacy

I have written the following code snippet to tokenize a sentence using Spacy:

```python
import spacy

# Load the language model
nlp = spacy.load("en_core_web_sm")

# Example text
text = "Spacy is an open-source NLP library designed for various preprocessing tasks."

# Tokenize the text using the loaded model
doc = nlp(text)

# Print individual tokens
for token in doc:
    print(token.text)
```

In this example, the text is tokenized using the nlp() function, which applies the loaded language model to the input text. The returned doc object contains a sequence of tokens, which can be accessed and printed in a loop.

Output:

```
Spacy
is
an
open
-
source
NLP
library
designed
for
various
preprocessing
tasks
.
```

Spacy’s tokenization process is efficient, accurate, and easily customizable, making it an excellent choice for preprocessing text in a wide variety of NLP tasks.
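The custom rules mentioned in this section can be tried out without downloading a model. The following is a minimal sketch, assuming a blank English pipeline created with spacy.blank("en"), which contains only the tokenizer. It registers one special case with the tokenizer's add_special_case method; the example word "gimme" is chosen purely for illustration.

```python
import spacy
from spacy.symbols import ORTH

# A blank pipeline has only the rule-based tokenizer, which is all we need here
nlp = spacy.blank("en")

# Built-in special cases already split common contractions
contraction = [t.text for t in nlp("don't")]

# Before customization, "gimme" stays a single token
before = [t.text for t in nlp("gimme that")]

# Register a special case: split "gimme" into two tokens.
# The ORTH values must concatenate back to the original string.
nlp.tokenizer.add_special_case("gimme", [{ORTH: "gim"}, {ORTH: "me"}])
after = [t.text for t in nlp("gimme that")]

print(contraction)
print(before)
print(after)

# tokenizer.explain reports which rule produced each token (useful for debugging)
for rule, token_text in nlp.tokenizer.explain("gimme that!"):
    print(rule, token_text)
```

Special cases only change how the string is split. They do not alter the underlying text, so the tokens can always be joined back into the original input.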

Stop words removal

Definition and importance of stop words

Stop words are common words that carry little or no significant meaning in text analysis, such as “and,” “the,” “is,” and “in.” Removing stop words from text data during preprocessing can help reduce noise and improve computational efficiency for various NLP tasks. By eliminating these high-frequency, low-value words, models can focus on more relevant words and phrases, resulting in better performance and more accurate results.

Identifying stop words using Spacy

Spacy provides a pre-defined list of stop words for several languages, allowing users to easily identify and remove them from their text data. The library’s stop word list can be customized by adding or removing words as needed, catering to specific requirements or domain-specific vocabularies. Thus, I can harness the power of large pre-defined lists as well as tweak them as per my needs. Isn’t that awesome!

Code examples for stop words removal

My code snippet given below removes the stop words from the sentence.

```python
import spacy

# Load the language model
nlp = spacy.load("en_core_web_sm")

# Example text
text = "Spacy is an open-source NLP library designed for various preprocessing tasks."

# Tokenize the text using the loaded model
doc = nlp(text)

# Remove stop words from the tokenized text
filtered_tokens = [token.text for token in doc if not token.is_stop]

# Print filtered tokens
print(filtered_tokens)
```

In this example, the text is first tokenized using the nlp() function. Then, a list comprehension is used to filter out the stop words by checking the token.is_stop attribute for each token in the doc object. The resulting filtered_tokens list contains only the relevant words from the input text.

Output:

```
['Spacy', 'open', '-', 'source', 'NLP', 'library', 'designed', 'preprocessing', 'tasks', '.']
```

Stop words removal is an essential step in NLP preprocessing, enabling models to focus on meaningful words and phrases. Spacy’s built-in stop words list and easy-to-use API make it a valuable tool for enhancing text data quality and improving NLP task performance.
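Customizing the stop word list, as described earlier in this section, can be sketched as follows. This is an illustrative example rather than a recommended configuration: it assumes a blank English pipeline, treats "btw" as a domain-specific stop word, and keeps "not" because negation often matters for tasks such as sentiment analysis. Both word choices are assumptions made for the example.

```python
import spacy

# is_stop is a lexical attribute, so a blank pipeline is enough here
nlp = spacy.blank("en")

# Words to add to / remove from the stop word list (illustrative choices)
extra_stop_words = ["btw"]
not_stop_words = ["not"]

for word in extra_stop_words:
    # Update the shared default set (affects lexemes created later) ...
    nlp.Defaults.stop_words.add(word)
    # ... and flag the vocabulary entry itself
    nlp.vocab[word].is_stop = True

for word in not_stop_words:
    nlp.Defaults.stop_words.discard(word)
    nlp.vocab[word].is_stop = False

doc = nlp("btw this is not bad")
kept = [token.text for token in doc if not token.is_stop]
print(kept)
```

Note that nlp.Defaults.stop_words is shared by every English pipeline in the same Python process, so this kind of change is best made once, right after loading the pipeline.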

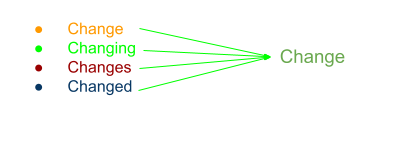

Lemmatization

Definition and importance of lemmatization

Lemmatization is a crucial preprocessing step in NLP that involves converting words to their base or dictionary form, known as the lemma. This process helps reduce inflectional and derivational variations of words, such as different verb forms, plurals, or comparative forms, enabling models to identify and analyze words based on their core meaning. By normalizing words, lemmatization improves the performance and accuracy of various NLP tasks, such as text classification, sentiment analysis, and information retrieval.

Changing -> Change

Spacy’s lemmatization approach

Spacy’s lemmatization process relies on its pre-trained statistical models and underlying linguistic data, such as part-of-speech (POS) tags and morphological features. The library uses a rule-based approach, which combines POS tags with lookup tables and morphological rules to determine the correct lemma for a given word. This approach enables Spacy to accurately lemmatize words across different languages and handle irregular forms or exceptions.

Code examples for lemmatization using Spacy

The code snippet below shows how I lemmatize the tokens in a sentence.

```python
import spacy

# Load the language model
nlp = spacy.load("en_core_web_sm")

# Example text
text = "The quick brown foxes are jumping over the lazy dogs."

# Tokenize and lemmatize the text using the loaded model
doc = nlp(text)

# Extract lemmas from the tokenized text
lemmas = [token.lemma_ for token in doc]

# Print lemmatized tokens
print(lemmas)
```

In this example, the text is tokenized using the nlp() function, which also applies POS tagging and other linguistic features necessary for lemmatization. The lemmatized tokens are then extracted using a list comprehension that iterates over the doc object and accesses the token.lemma_ attribute for each token. The resulting lemmas list contains the base forms of the words from the input text.

Output:

```
['the', 'quick', 'brown', 'fox', 'be', 'jump', 'over', 'the', 'lazy', 'dog', '.']
```

Lemmatization is an essential aspect of NLP preprocessing, and Spacy’s robust and accurate approach makes it an excellent choice for normalizing text data in various NLP tasks.

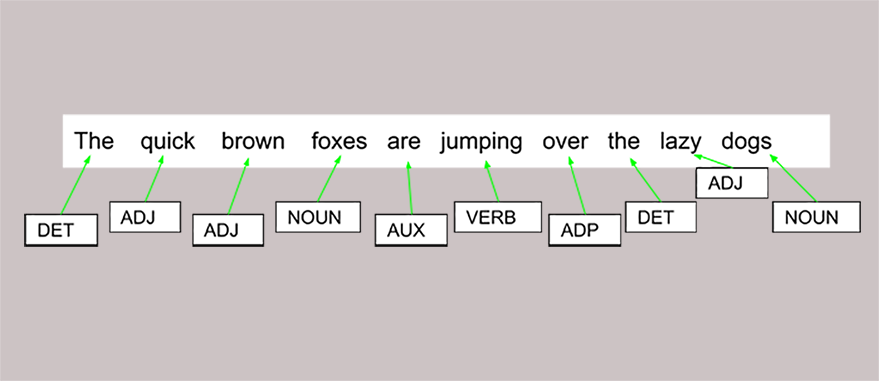

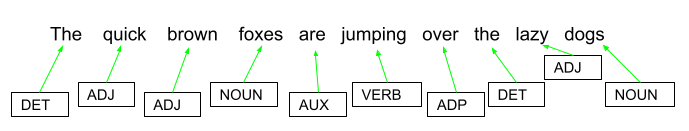

Part-of-speech (POS) tagging

Definition and use cases of POS tagging

Part-of-speech (POS) tagging is the process of assigning grammatical categories, such as nouns, verbs, adjectives, and adverbs, to individual words in a text. This information is valuable for understanding the syntactic structure and semantic relationships within the text. POS tagging is essential for various NLP tasks, including parsing, named entity recognition, sentiment analysis, and text summarization. By incorporating grammatical information, models can better analyze and interpret the meaning of words and phrases in context.


Spacy’s POS tagging capabilities

Spacy’s POS tagging relies on pre-trained statistical models and linguistic features to accurately assign POS tags to words. The library’s models are trained on extensive annotated data, enabling them to handle a wide range of languages and text styles. Spacy’s POS tagger also benefits from its integration with the processing pipeline, which provides additional linguistic information, such as tokenization and dependency parsing, to enhance the tagging accuracy.

Code examples for POS tagging using Spacy

The code given below generates the POS tag for each word in the sentence. Where NLTK asks you to tokenize and tag in separate steps, Spacy attaches the tag to each token in a single pass, so reading it is a one-liner.

```python
import spacy

# Load the language model
nlp = spacy.load("en_core_web_sm")

# Example text
text = "The quick brown foxes are jumping over the lazy dogs."

# Tokenize and POS-tag the text using the loaded model
doc = nlp(text)

# Extract and print POS tags for each token
for token in doc:
    print(f"{token.text}: {token.pos_}")
```

In this example, the text is first tokenized and processed using the nlp() function, which applies POS tagging along with other linguistic features. The resulting doc object contains tokens with their associated POS tags, which can be accessed using the token.pos_ attribute. By iterating through the doc object, you can print the tokens and their corresponding POS tags.

Output:

```
The: DET
quick: ADJ
brown: ADJ
foxes: NOUN
are: AUX
jumping: VERB
over: ADP
the: DET
lazy: ADJ
dogs: NOUN
.: PUNCT
```

Spacy’s efficient and accurate POS tagging capabilities make it a valuable tool for preprocessing and analyzing text data in a wide range of NLP tasks.
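One common use of POS tags during preprocessing is to keep only content words. The sketch below illustrates that idea under a stated assumption: instead of running the trained tagger, it builds a Doc by hand with the tags copied from the output listed in this section, so it runs without downloading a model. The set of tags counted as "content" is also my own choice for the example.

```python
from collections import Counter

import spacy
from spacy.tokens import Doc

nlp = spacy.blank("en")

# Words and tags copied from the tagger output shown in this section
words = ["The", "quick", "brown", "foxes", "are", "jumping",
         "over", "the", "lazy", "dogs", "."]
pos = ["DET", "ADJ", "ADJ", "NOUN", "AUX", "VERB",
       "ADP", "DET", "ADJ", "NOUN", "PUNCT"]

# Build a Doc with pre-assigned POS tags (stand-in for doc = nlp(text))
doc = Doc(nlp.vocab, words=words, pos=pos)

# Tags treated as content-bearing in this example (an assumption)
CONTENT_POS = {"NOUN", "PROPN", "VERB", "ADJ", "ADV"}

content_words = [token.text for token in doc if token.pos_ in CONTENT_POS]
tag_counts = Counter(token.pos_ for token in doc)

print(content_words)
print(tag_counts.most_common(3))
```

With a trained pipeline, the same filtering works unchanged on the doc returned by nlp(text).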

Named Entity Recognition (NER)

Definition and importance of NER

Named Entity Recognition (NER) is an NLP task that involves identifying and classifying entities, such as names of people, organizations, locations, dates, and monetary values, within a text. By extracting these entities, NER helps uncover the underlying meaning and context of the text, enabling further analysis and information extraction. NER is essential for various applications, including information retrieval, sentiment analysis, question-answering systems, and knowledge graph construction.

Spacy’s NER capabilities and pre-trained models

Spacy offers robust NER capabilities using pre-trained statistical models and deep learning techniques. These models are trained on extensive annotated data, allowing them to recognize a wide range of entity types across different languages and domains. Spacy’s NER models can be easily integrated with its processing pipeline, providing a comprehensive solution for text analysis. In addition to the pre-trained models, Spacy enables users to train custom NER models and fine-tune them for specific use cases or domain-specific entities.

Code examples for NER using Spacy

Spacy offers an elegant way to perform Named Entity Recognition. The code below shows NER using Spacy.

```python
import spacy

# Load the language model
nlp = spacy.load("en_core_web_sm")

# Example text
text = "Apple Inc. is an American multinational technology company headquartered in Cupertino, California."

# Perform NER using the loaded model
doc = nlp(text)

# Extract and print named entities and their types
for ent in doc.ents:
    print(f"{ent.text}: {ent.label_}")
```

In this example, the text is processed using the nlp() function, which applies NER along with other linguistic features. The resulting doc object contains the named entities and their corresponding entity types, which can be accessed through the doc.ents attribute. By iterating through the doc.ents object, you can print the named entities and their classifications.

Output:

```
Apple Inc.: ORG
American: NORP
Cupertino: GPE
California: GPE
```

Spacy’s powerful NER capabilities and pre-trained models make it an excellent choice for identifying and classifying named entities in various NLP tasks and applications.
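For domain-specific entities, training a custom model is not the only option. A lighter alternative is Spacy's rule-based entity_ruler component, sketched below. To be clear, this is a different technique from the statistical NER discussed in this section: it matches hand-written patterns instead of learning from annotated data. The patterns and the blank pipeline are assumptions made to keep the example self-contained.

```python
import spacy

# Blank pipeline: no trained NER, only the rules we add below
nlp = spacy.blank("en")

# Add the rule-based entity ruler and give it a few illustrative patterns
ruler = nlp.add_pipe("entity_ruler")
ruler.add_patterns([
    # Exact phrase match
    {"label": "ORG", "pattern": "Apple Inc."},
    # Token-level match, case-insensitive
    {"label": "GPE", "pattern": [{"LOWER": "cupertino"}]},
])

doc = nlp("Apple Inc. is headquartered in Cupertino, California.")
entities = [(ent.text, ent.label_) for ent in doc.ents]
print(entities)
```

Only spans covered by a pattern are labeled, so "California" is not recognized here. In practice the ruler is often combined with a trained NER component to cover both known terms and unseen names.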

Dependency parsing

Definition and purpose of dependency parsing

Dependency parsing is an NLP task that involves identifying the grammatical relationships between words in a sentence, represented as a directed graph where nodes correspond to words, and edges correspond to the dependency relationships between them. This process helps uncover the syntactic structure of a sentence and reveals how words interact to convey meaning. Dependency parsing is essential for various NLP applications, such as machine translation, information extraction, question-answering systems, and sentiment analysis.

Spacy’s dependency parsing capabilities

Spacy offers a fast and accurate dependency parsing algorithm based on pre-trained statistical models and neural networks. These models are trained on extensive annotated data and can handle a wide range of languages and text styles. Spacy’s dependency parser is fully integrated with its processing pipeline, which allows users to leverage other linguistic features, such as POS tagging and NER, to enhance the parsing accuracy.

Code examples for dependency parsing using Spacy

I have written a sample snippet that prints the dependency parse using Spacy. You may be surprised by how simply and elegantly Spacy handles dependencies: reading them is just a one-liner!

```python
import spacy

# Load the language model
nlp = spacy.load("en_core_web_sm")

# Example text
text = "The quick brown fox jumps over the lazy dog."

# Perform dependency parsing using the loaded model
doc = nlp(text)

# Extract and print tokens, dependency labels, and head tokens
for token in doc:
    print(f"{token.text}: {token.dep_} -> {token.head.text}")
```

In this example, the text is processed using the nlp() function, which applies dependency parsing along with other linguistic features. The resulting doc object contains tokens with their associated dependency labels and head tokens, which can be accessed using the token.dep_ and token.head.text attributes, respectively. By iterating through the doc object, you can print the tokens, their dependency labels, and the head tokens representing the dependency relationships.

Output:

```
The: det -> fox
quick: amod -> fox
brown: amod -> fox
fox: nsubj -> jumps
jumps: ROOT -> jumps
over: prep -> jumps
the: det -> dog
lazy: amod -> dog
dog: pobj -> over
.: punct -> jumps
```

Spacy’s efficient and accurate dependency parsing capabilities make it a valuable tool for uncovering the syntactic structure and relationships in text data across various NLP tasks and applications.
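To make the "directed graph" view of a parse more concrete, the sketch below rebuilds the tree from the output listed in this section using plain Python. The token, label, and head lists are copied by hand from that output (heads are given as token positions), and the subtree helper is a hypothetical function written for this illustration. Spacy itself exposes the same information through token.children and token.subtree.

```python
from collections import defaultdict

# Parse copied from the output in this section; heads are token positions
tokens = ["The", "quick", "brown", "fox", "jumps", "over", "the", "lazy", "dog", "."]
deps = ["det", "amod", "amod", "nsubj", "ROOT", "prep", "det", "amod", "pobj", "punct"]
heads = [3, 3, 3, 4, 4, 4, 8, 8, 5, 4]

# Build the graph: head position -> positions of its direct dependents
children = defaultdict(list)
root = None
for i, head in enumerate(heads):
    if head == i:
        root = i  # the root token is its own head
    else:
        children[head].append(i)


def subtree(i):
    """Return the positions of token i and all its descendants, in sentence order."""
    nodes = [i]
    for child in children[i]:
        nodes.extend(subtree(child))
    return sorted(nodes)


# The subject phrase is the subtree of the root's nsubj dependent
subject = next(i for i in children[root] if deps[i] == "nsubj")
subject_phrase = " ".join(tokens[j] for j in subtree(subject))

# The prepositional phrase is the subtree of the root's prep dependent
prep = next(i for i in children[root] if deps[i] == "prep")
prep_phrase = " ".join(tokens[j] for j in subtree(prep))

print(tokens[root], "|", subject_phrase, "|", prep_phrase)
```

Walking subtrees like this is the basis for extracting subject-verb-object patterns or noun phrases from a parse.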

Customizing Spacy’s pipeline

Overview of Spacy’s processing pipeline

Spacy’s processing pipeline is a sequence of operations applied to text data in order to extract linguistic features and analyze the text. The pipeline of a trained model such as en_core_web_sm starts with the tokenizer and typically continues with components such as a POS tagger, a dependency parser, a lemmatizer, and a named entity recognizer; other components, such as a text classifier, can be added when a task needs them. These components are applied in a specific order, since some rely on annotations set by earlier ones. Spacy’s pipeline is highly customizable, allowing users to add, modify, or disable components as needed. On top of its already vast number of capabilities, this lets me handle specific scenarios by customizing the pipeline.

Adding, modifying, and disabling pipeline components

Spacy provides an easy-to-use API for customizing the processing pipeline. Users can add new components or custom functions to the pipeline, modify existing components, or disable components that are not required for their specific use case.

- Adding a custom component: Users can create custom functions and add them to the pipeline using the nlp.add_pipe() method. The custom function should accept a Doc object and return it after processing.

- Modifying a component: Users can modify the behavior of existing components, such as adding new rules to the tokenizer or customizing the list of stop words.

- Disabling a component: Users can disable unnecessary components in the pipeline, which can improve processing speed and efficiency. This can be done temporarily using the nlp.disable_pipes() method (superseded by nlp.select_pipes() in Spacy v3), or at load time by passing the disable or exclude parameter to spacy.load().

Code examples for customizing the pipeline

The sample code below shows how I customize my Spacy pipeline. You can implement any processing logic in the custom component.

```python
import spacy
from spacy.language import Language


@Language.component('custom_component')
def custom_component(doc):
    # Custom processing logic
    return doc


# Load the language model
nlp = spacy.load("en_core_web_sm")

# Add a custom component to the pipeline
nlp.add_pipe('custom_component', last=True)

# Disable the named entity recognizer in the pipeline
with nlp.disable_pipes("ner"):
    # Process the text with the customized pipeline
    text = "The quick brown fox jumps over the lazy dog."
    doc = nlp(text)

    # Print tokens, POS tags, and dependency labels
    for token in doc:
        print(f"{token.text}: {token.pos_}, {token.dep_}")
```

In this example, a custom component is added to the pipeline using the nlp.add_pipe() method. The NER component is disabled using the nlp.disable_pipes() method, and the text is processed with the customized pipeline. The resulting doc object contains tokens with their associated POS tags and dependency labels, which can be printed by iterating through the doc object.

Output:

```
The: DET, det
quick: ADJ, amod
brown: ADJ, amod
fox: NOUN, nsubj
jumps: VERB, ROOT
over: ADP, prep
the: DET, det
lazy: ADJ, amod
dog: NOUN, pobj
.: PUNCT, punct
```

Customizing Spacy’s pipeline allows users to tailor the library’s capabilities to their specific needs, enhancing the performance and effectiveness of their NLP tasks and applications.
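To see what adding and disabling components does to the pipeline itself, it helps to inspect nlp.pipe_names before, during, and after the change. The sketch below is a small self-contained variation on the example in this section. It assumes a blank English pipeline with the built-in sentencizer instead of a trained model, uses nlp.select_pipes() (the Spacy v3 counterpart of nlp.disable_pipes()), and defines a hypothetical token_counter component that stores the token count in doc.user_data.

```python
import spacy
from spacy.language import Language


@Language.component("token_counter")
def token_counter(doc):
    # Hypothetical custom logic: record the number of tokens on the Doc
    doc.user_data["n_tokens"] = len(doc)
    return doc


# Start from a blank pipeline and add two components
nlp = spacy.blank("en")
nlp.add_pipe("sentencizer")               # built-in rule-based sentence splitter
nlp.add_pipe("token_counter", last=True)  # our custom component

names_before = list(nlp.pipe_names)

# Temporarily disable the sentencizer; it is restored when the block exits
with nlp.select_pipes(disable=["sentencizer"]):
    names_inside = list(nlp.pipe_names)
    doc_without_sents = nlp("One sentence. Another one.")

names_after = list(nlp.pipe_names)
doc_with_sents = nlp("One sentence. Another one.")

print(names_before, names_inside, names_after)
print(doc_without_sents.user_data["n_tokens"])
print([sent.text for sent in doc_with_sents.sents])
```

Disabling components this way only affects the block it wraps, which makes it convenient for running one pipeline in a fast "tokens only" mode for some texts and in full mode for others.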

Conclusion

In this article, we explored the powerful NLP preprocessing capabilities of Spacy, a popular open-source library designed for various natural language processing tasks. We covered essential preprocessing techniques such as tokenization, stop words removal, lemmatization, part-of-speech tagging, named entity recognition, and dependency parsing. Additionally, we discussed customizing Spacy’s pipeline to cater to specific requirements or domain-specific tasks.

Effective preprocessing is crucial for accurate and efficient NLP tasks, as it helps reduce noise, uncover the syntactic structure, and reveal semantic relationships within the text. By leveraging Spacy’s robust functionality and pre-trained models, users can streamline the preprocessing process, enabling their models to focus on meaningful words and phrases, ultimately resulting in better performance and more accurate results.

Spacy’s comprehensive set of features and tools makes it an excellent choice for various NLP applications. We encourage you to further explore Spacy’s capabilities, experiment with its customization options, and leverage its advanced functionalities to enhance your text analysis and natural language processing projects.

You can find the code from this article here.

Sources

- “Text Preprocessing in Python using spaCy library”, https://iq.opengenus.org/text-preprocessing-in-spacy/

- “Text Analysis with Spacy to Master NLP Techniques”, https://www.analyticsvidhya.com/blog/2021/06/text-analysis-with-spacy-to-master-nlp-techniques/

- “Natural Language Processing With spaCy in Python”, https://realpython.com/natural-language-processing-spacy-python/

- “Text preprocessing using Spacy”, https://www.kaggle.com/code/tanejapranav/text-preprocessing-using-spacy

- “Language Processing Pipelines”, https://spacy.io/usage/processing-pipelines
