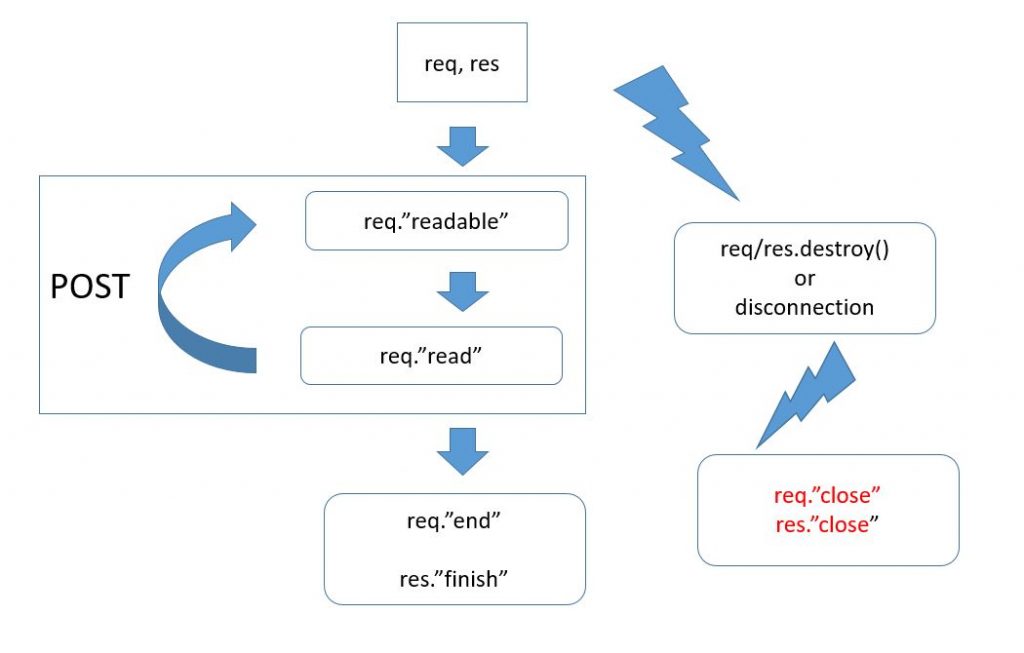

So, whatever we type, the same message gets sent. Let us fix that. We send messages with the POST method. To read the body of a POST request from req, we need to work with req as a stream. So, let us look at the scheme that describes a request’s lifecycle, in particular that of the req and res objects.

We’ll need this information later. So, we’ve received a request. If it is a GET request, it has no body. It has only headers: the URL and the other headers sent by the browser, all of which arrive in full and are handled immediately. A POST request, on the other hand, contains a body that has to be read by working with req as a stream. We used POST to send messages because GET, as we’ve mentioned earlier, must not be used to change data. It is good only for retrieving it. So, we take req and attach the handlers we need to it. Here is how it can look (server.js):

    http.createServer(function(req, res) {
      switch (req.url) {
        case '/':
          sendFile("index.html", res);
          break;

        case '/subscribe':
          chat.subscribe(req, res);
          break;

        case '/publish':
          var body = '';

          req
            .on('readable', function() {
              var chunk = req.read();
              if (chunk !== null) {
                body += chunk;
              }
            })
            .on('end', function() {
              body = JSON.parse(body);
              chat.publish(body.message);
              res.end("ok");
            });

          break;

        default:
          res.statusCode = 404;
          res.end("Not found");
      }
    }).listen(3000);

Each time another chunk of data arrives, we append it to a temporary variable. When the data has been fully received, we parse it as JSON and publish it to the chat with chat.publish(body.message). This call sends the message to everyone who has already subscribed through /subscribe. It does nothing for the current request, though, so the request that delivered the POST still has to be completed, for example by reporting that everything has gone well:

res.end("ok")

Such code will generally work.
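The reading step can also be pulled out into a small helper. The following is only a sketch: collectBody is a hypothetical name that is not part of the lesson code, and a PassThrough stream stands in for req so that the example runs without a server. Unlike the handler above, it calls read() in a loop, because a single 'readable' event may cover more than one buffered chunk.

```javascript
const { PassThrough } = require('stream');

// Hypothetical helper (not part of the lesson code): collect the whole
// body of a readable stream and pass it to onDone as one string.
function collectBody(req, onDone) {
  let body = '';

  req.on('readable', function () {
    let chunk;
    // Keep reading until read() returns null, i.e. the internal
    // buffer is empty for now.
    while ((chunk = req.read()) !== null) {
      body += chunk;
    }
  });

  req.on('end', function () {
    onDone(body);
  });
}

// Demo: a PassThrough stream plays the role of req here.
const fakeReq = new PassThrough();

collectBody(fakeReq, function (body) {
  console.log('received:', JSON.parse(body).message);
});

fakeReq.write('{"message":');
fakeReq.end('"hello"}');
```

In server.js the same helper could be called with the real req inside case '/publish'.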

On the other hand, code like this assumes that our clients are practically saints: they always send valid JSON and never try to do anything harmful. That is surely wrong. Clients may be very different. So, let us see what we can change here. The first issue is JSON parsing: if the body is invalid, JSON.parse(body) throws an exception, and that will crash the whole process.

So, let us catch it. If an error occurs, we tell the client: sorry, the request you’ve sent is incorrect. Add this to our case:

    case '/publish':
      var body = '';

      req
        .on('readable', function() {
          var chunk = req.read();
          if (chunk !== null) {
            body += chunk;
          }
        })
        .on('end', function() {
          try {
            body = JSON.parse(body);
          } catch (e) {
            res.statusCode = 400;
            res.end("Bad Request");
            return;
          }

          chat.publish(body.message);
          res.end("ok");
        });

      break;
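The try/catch above handles malformed JSON, but a body such as null parses successfully, and reading body.message from it then throws outside the try block. A possible way to cover this as well is sketched below. parsePublishBody is a hypothetical helper, and the rule that message must be a string is an assumption made for this example, not something the lesson states.

```javascript
// Hypothetical helper (not part of the lesson code): turn a raw request
// body into either a message or an HTTP status code for the error.
function parsePublishBody(raw) {
  let parsed;

  try {
    parsed = JSON.parse(raw);
  } catch (e) {
    return { status: 400 }; // malformed JSON
  }

  // JSON.parse('null') and JSON.parse('42') succeed, so the shape of
  // the result has to be checked too.
  // Assumption: a valid body is an object with a string "message".
  if (parsed === null || typeof parsed !== 'object' ||
      typeof parsed.message !== 'string') {
    return { status: 400 };
  }

  return { status: 200, message: parsed.message };
}

console.log(parsePublishBody('{"message":"hi"}'));
console.log(parsePublishBody('not json'));
console.log(parsePublishBody('null'));
```

In the 'end' handler, a result with status 400 would lead to res.statusCode = 400 and res.end("Bad Request"), and only a result with status 200 would reach chat.publish.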

Status code 400 (Bad Request) is exactly the right answer here, and it ends our conversation with this client. Another safety problem that matters to us is how we use memory. If a client is very angry or, on the contrary, very generous and wants to send us the entire contents of his hard disk, everything he sends will keep accumulating in the variable body and will soon exhaust the available memory, crashing the server. So, in order to avoid this, let us add a little check to our case '/publish':

    case '/publish':
      var body = '';

      req
        .on('readable', function() {
          var chunk = req.read();
          if (chunk !== null) {
            body += chunk;
          }

          console.log(body.length);

          if (body.length > 1000) {
            res.statusCode = 413;
            res.end("Your message is too big for my little chat");
          }
        })
        .on('end', function() {
          if (res.statusCode === 413) return;

          try {
            body = JSON.parse(body);
          } catch (e) {
            res.statusCode = 400;
            res.end("Bad Request");
            return;
          }

          chat.publish(body.message);
          res.end("ok");
        });

      break;

If the body becomes too long after some chunk, we respond with 413 (the request body is too large) and likewise stop communicating with this client. So, let us launch our server and check the chat. It is better to do this in two different browsers, so that you can see how it works.

Well, our chat is ready, but one little detail is left: what happens if a client ends the connection? Let us look once again at the chat module. Here we can see that incoming clients are added to the array. None of them gets removed from the array. Accordingly, if a client suddenly closes the browser and thus ends the connection, the array will still hold an unnecessary connection that has already been closed. On the one hand, this may be quite acceptable, because writing to such a connection does no harm: it simply has no effect. On the other hand, why would we keep an extra connection? Fortunately, we can easily catch the moment when the connection is closed:
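The handler above answers 413 once the limit is exceeded, but it still keeps appending later chunks to body. One way to stop accumulating is sketched below. createBodyCollector is a hypothetical helper, and the sketch assumes that chunks arrive as strings (for example after req.setEncoding('utf8')), so that length counts characters.

```javascript
// Hypothetical helper (not part of the lesson code): accumulate chunks
// up to a limit and refuse everything after the limit is exceeded.
function createBodyCollector(limit) {
  let body = '';
  let overflowed = false;

  return {
    // Returns true if the chunk was stored, false if it was rejected.
    push: function (chunk) {
      if (overflowed) return false;

      if (body.length + chunk.length > limit) {
        overflowed = true;
        body = ''; // release what has been collected so far
        return false;
      }

      body += chunk;
      return true;
    },
    isOverflowed: function () { return overflowed; },
    getBody: function () { return body; }
  };
}

// Demo with a limit of 10 characters.
const collector = createBodyCollector(10);
console.log(collector.push('12345'));    // stored: 5 characters so far
console.log(collector.push('1234567'));  // rejected: 12 would exceed 10
console.log(collector.isOverflowed());
```

In the 'readable' handler, the first false returned by push would be the moment to set res.statusCode = 413 and call res.end once; later chunks are then simply dropped.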

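    exports.subscribe = function(req, res) {
      console.log("subscribe");
      clients.push(res);

      res.on('close', function() {
        // Remove this response only if it is still in the array:
        // indexOf returns -1 otherwise, and splice(-1, 1) would
        // remove the last client instead.
        var index = clients.indexOf(res);
        if (index !== -1) {
          clients.splice(index, 1);
        }
      });
    };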

According to the lesson, this event fires only if the connection is closed before the server finishes it. Normally we call res.end ourselves, but if we do not and the connection just breaks or gets destroyed, the close event is emitted. Note that in recent Node.js versions close is also emitted after a response has completed normally, so the handler should tolerate a res that is no longer in the array. In the handler we remove the connection from the array. This operation could be more efficient if we stored clients in an object instead of an array, but everything works with the array, too. To make sure, let us add a setInterval that outputs the length of the current clients array every 2 seconds:

    setInterval(function() {
      console.log(clients.length);
    }, 2000);

Launch the code and check in the browsers that the chat works. It does. Now refresh the page: the current connection will be closed, and a new one will be opened. Refresh it again. As you can see, there are still 2 active connections.
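As for the remark that removal could be more efficient with something other than an array, here is a minimal sketch that keeps subscribers in a Set. The Set is a swapped-in choice for this example (the text suggests an object), the shape of publish is an assumption because this lesson does not show it, and plain EventEmitter objects stand in for res.

```javascript
const EventEmitter = require('events');

// Sketch: subscribers are kept in a Set instead of an array.
const clients = new Set();

function subscribe(req, res) {
  clients.add(res);

  res.on('close', function () {
    // delete() does nothing if res has already been removed.
    clients.delete(res);
  });
}

// Assumed shape of publish: answer every waiting client, then forget them.
function publish(message) {
  clients.forEach(function (res) {
    res.end(message);
  });
  clients.clear();
}

// Demo: an EventEmitter with an end() method plays the role of res.
const fakeRes = new EventEmitter();
fakeRes.end = function (text) { console.log('sent:', text); };

subscribe(null, fakeRes);
console.log(clients.size); // one subscriber registered
fakeRes.emit('close');
console.log(clients.size); // removed again after 'close'
```

With a Set there is no index lookup, so a close event for an already removed response is harmless.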

You will find the lesson code for downloading in our repository.

The lesson materials were borrowed from the following screencast.

We are looking forward to meeting you on our website soshace.com