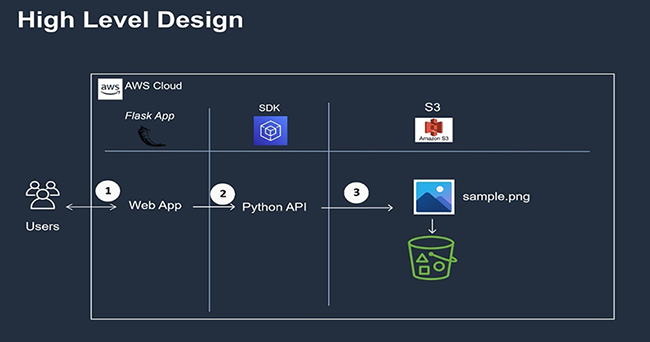

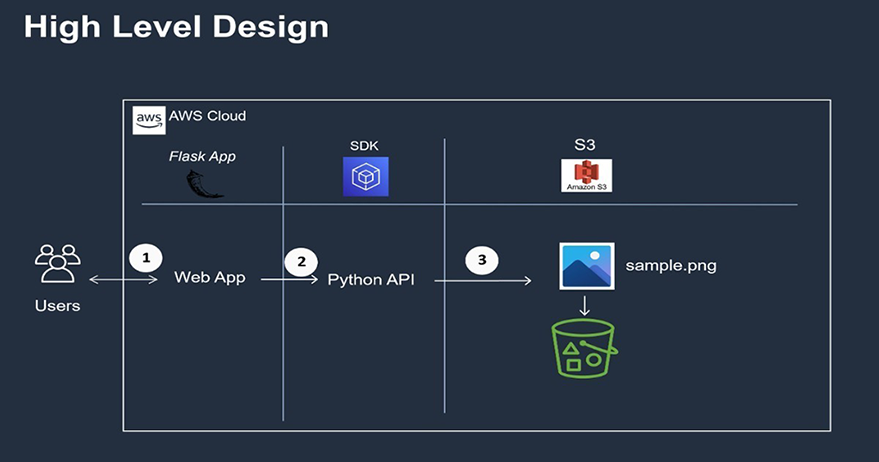

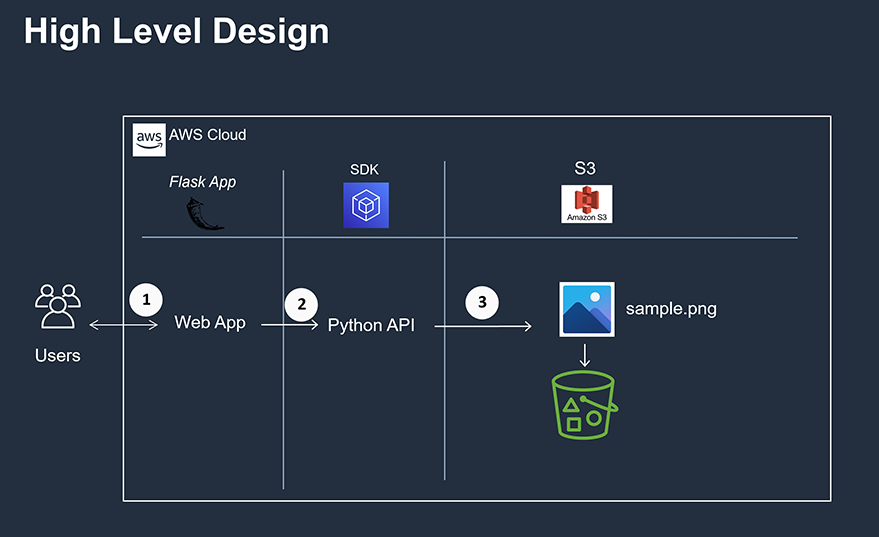

This article is aimed at developers who want to upload small files to Amazon S3 using Flask forms. In this tutorial, I will start with an overview of Amazon S3, followed by the Python Boto3 code to manage file operations on an S3 bucket, and finally integrate that code with a Flask form.

1) Application Overview

As a web developer, you will often need to let users upload files to a database or a server for further processing. Cloud computing treats infrastructure as software rather than hardware, which lets web developers with limited infrastructure knowledge take full advantage of cloud services.

Amazon Web Services (AWS) is one of the most popular cloud computing platforms, and Python has become a go-to language for working with the cloud.

Objectives of this tutorial:

By the end of this tutorial, you will be able to:

- Create an S3 bucket with the Python SDK (boto3).

- Enable bucket versioning to keep track of file versions.

- Upload small files to S3 with the Python SDK.

- Create a Flask form that allows only certain file types to be uploaded to S3.

Alright, let us start with an introduction to Amazon S3.

2) Introduction To Amazon S3

Amazon Simple Storage Service (S3) provides secure, highly scalable object storage with a simple web service interface for storing and retrieving any amount of data. The main advantage of storing objects (in our case, small files) in S3 is that they can be accessed anytime, from anywhere on the web, instead of logging into a database or an application server to get a file.

With the AWS SDK, we can integrate S3 with other AWS services as well as with external applications such as a Flask app.

The terms “files” and “objects” mean pretty much the same thing in S3, which refers to every file as an object. Let us look at the basic components of S3 before going any further.

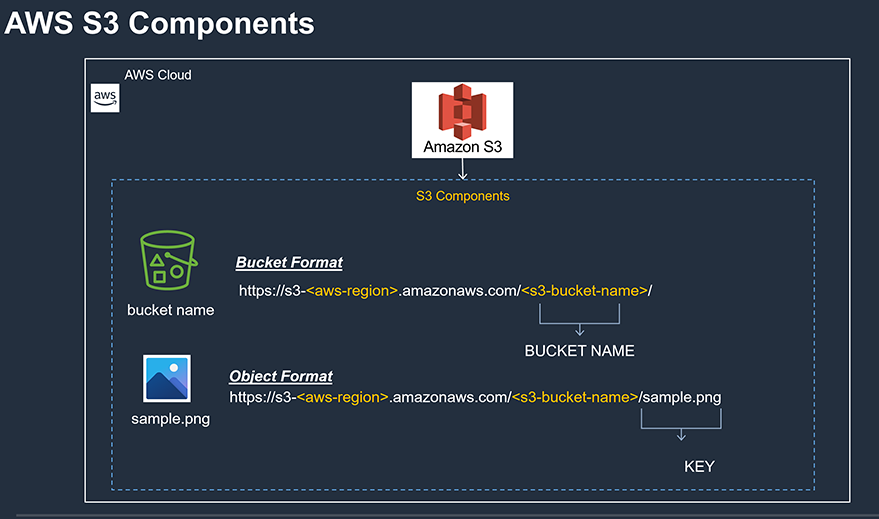

The basic components of an S3 bucket are:

- Bucket name

- Objects (files) in a bucket

- Key

The diagram above shows how these components relate to each other. The key is a unique identifier for each object within a bucket. More details on S3 can be found in the official Amazon S3 documentation.
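S3 has a flat namespace: what the console shows as a “folder” is just a key prefix ending in `/`. As a rough illustration, the two hypothetical helpers below (`build_object_key` and `split_object_key` are not part of boto3 or this tutorial's code) build a key from an optional prefix and a file name and split it back again. They only manipulate strings and never call AWS.

```python
from __future__ import annotations


def build_object_key(filename: str, prefix: str = "") -> str:
    """Join a 'folder-like' prefix and a file name into one S3 key (hypothetical helper)."""
    prefix = prefix.strip("/")
    return f"{prefix}/{filename}" if prefix else filename


def split_object_key(key: str) -> tuple[str, str]:
    """Split a key into (prefix, file name); the prefix is '' for top-level keys."""
    prefix, _, filename = key.rpartition("/")
    return prefix, filename


key = build_object_key("report.xlsx", prefix="uploads/2021/")
print(key)                    # uploads/2021/report.xlsx
print(split_object_key(key))  # ('uploads/2021', 'report.xlsx')
```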

3) Storage Solution With Python SDK

Next, we will get our back-end code ready: it takes the file the user submits through the Flask form and uploads it to S3. First things first, let us create the S3 bucket.

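Install the Python boto3 package with pip. boto3 reads your AWS credentials from the standard locations, such as ~/.aws/credentials (created by `aws configure`) or environment variables.

```shell
pip install boto3
```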

1) Define S3 Client

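We need to import boto3 and define a function that returns an S3 client. Bucket names are global, but every bucket lives in a specific AWS region. When we do not pass a region to the client, boto3 uses the default region from your AWS configuration (for example, ~/.aws/config or the AWS_DEFAULT_REGION environment variable).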

```python
import json  # used by s3_create_bucket_policy below

import boto3


# ------------------------------------------------------------------------------
def s3_client():
    """
    Function: s3_client
    Purpose: create and return a boto3 S3 client
    :returns: boto3 S3 client
    """
    session = boto3.session.Session()
    client = session.client('s3')
    return client
```
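The region matters as soon as a bucket is created: us-east-1 is the default location, and S3 rejects an explicit `LocationConstraint` of `us-east-1`, so the configuration should only be passed for other regions. A minimal sketch of that rule, as a hypothetical helper (`create_bucket_kwargs` is not part of boto3) that only builds the keyword arguments:

```python
from __future__ import annotations


def create_bucket_kwargs(bucket_name: str, region: str | None) -> dict:
    """Build keyword arguments for an S3 client's create_bucket() call (hypothetical helper).

    The LocationConstraint is only added for regions other than us-east-1,
    because us-east-1 is the default and must not be passed explicitly.
    """
    kwargs = {"Bucket": bucket_name}
    if region and region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


# With boto3 this could be used as:
#   s3_client().create_bucket(**create_bucket_kwargs(name, boto3.session.Session().region_name))
print(create_bucket_kwargs("flask-small-file-uploads", "eu-west-1"))
```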

2) Create S3 Bucket

The following function accepts the bucket name as a parameter and uses the S3 client from the function above to create the bucket in your current region. If no region is specified, the bucket is created in the default us-east-1 (N. Virginia) region. The bucket name must follow these rules:

- The bucket name must be 3–63 characters long.

- Only lowercase letters, numbers, dots (.), and hyphens (-) are allowed.

- The name must begin and end with a letter or a number. AWS recommends avoiding dots except for buckets used only for static website hosting.
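Before creating a bucket, it can help to check a candidate name locally. The hypothetical helper below (`is_valid_bucket_name` is not an AWS API) checks the basic rules: 3–63 characters; only lowercase letters, digits, dots, and hyphens; first and last character a letter or digit; no two adjacent dots; and not formatted like an IP address. AWS enforces a few additional rules, so treat this as a quick pre-check rather than a complete validator.

```python
import ipaddress
import re

# Lowercase letters, digits, dots and hyphens; first and last character
# must be a letter or digit. The 3-63 length rule is checked separately.
_BUCKET_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Check a bucket name against the basic S3 naming rules (not exhaustive)."""
    if not 3 <= len(name) <= 63:
        return False
    if not _BUCKET_NAME_RE.fullmatch(name):
        return False
    if ".." in name:  # adjacent periods are not allowed
        return False
    try:
        ipaddress.ip_address(name)  # names like "192.168.5.4" are not allowed
        return False
    except ValueError:
        return True


print(is_valid_bucket_name("flask-small-file-uploads"))  # True
print(is_valid_bucket_name("My_Bucket"))                 # False
```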

```python
# ------------------------------------------------------------------------------
def s3_create_bucket(bucket_name):
    """
    Function: s3_create_bucket - create an S3 bucket in the current region
    :args: bucket_name - name of the S3 bucket
    :returns: response from create_bucket
    """
    # fetch the region configured for this session
    session = boto3.session.Session()
    current_region = session.region_name

    # get the client
    client = s3_client()
    print(f" *** You are in {current_region} AWS region..\n Bucket name passed is - {bucket_name}")

    # us-east-1 is the default location and must not be passed as a LocationConstraint
    if current_region in (None, 'us-east-1'):
        s3_bucket_create_response = client.create_bucket(Bucket=bucket_name)
    else:
        s3_bucket_create_response = client.create_bucket(
            Bucket=bucket_name,
            CreateBucketConfiguration={'LocationConstraint': current_region})

    print(f" *** Response when creating bucket - {s3_bucket_create_response} ")
    return s3_bucket_create_response
```

3) Creating Bucket Policy

Now that we have created a bucket, we will create a bucket policy to restrict who can access the objects inside it, and from where. In short, a bucket policy is how you configure access to your bucket: which principals can perform which actions, optionally limited by conditions such as source IP ranges.

I will build the policy as a Python dictionary and serialize it to JSON with json.dumps before applying it.
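To make the policy structure concrete, here is a minimal sketch of a policy builder. It is a hypothetical helper (not part of the tutorial's code) that, unlike the allow-everything test policy used in this tutorial, grants only `s3:GetObject` and `s3:PutObject`, and takes the allowed CIDR range as a parameter. The range `203.0.113.0/24` in the example is a documentation-only placeholder; substitute your own. The returned string is what you would pass as `Policy` to `put_bucket_policy`.

```python
import json


def build_ip_restricted_policy(bucket_name: str, cidr: str) -> str:
    """Return a bucket policy (JSON string) that allows object reads and writes
    only from the given CIDR range. Hypothetical helper."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowObjectAccessFromIpRange",
                "Effect": "Allow",
                "Principal": "*",
                "Action": ["s3:GetObject", "s3:PutObject"],
                # object-level actions apply to the objects, hence the "/*" suffix
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
                # aws:SourceIp expects an IP address or CIDR range
                "Condition": {"IpAddress": {"aws:SourceIp": cidr}},
            }
        ],
    }
    return json.dumps(policy)


print(build_ip_restricted_policy("flask-small-file-uploads", "203.0.113.0/24"))
```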

```python
# ------------------------------------------------------------------------------
def s3_create_bucket_policy(s3_bucket_name):
    """
    Function: s3_create_bucket_policy - apply a bucket policy
    :args: s3_bucket_name - name of the S3 bucket
    :returns: response from put_bucket_policy
    Notes: for test purposes this allows all actions; tighten it later.
    """
    resource = f"arn:aws:s3:::{s3_bucket_name}/*"
    s3_bucket_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AddPerm",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": resource,
                # aws:SourceIp expects an IP address or CIDR range, not an empty string.
                # 203.0.113.0/24 is a documentation placeholder: replace it with your own range.
                "Condition": {
                    "IpAddress": {"aws:SourceIp": "203.0.113.0/24"}
                }
            }
        ]
    }

    # prepare the policy to be applied to AWS as JSON
    policy = json.dumps(s3_bucket_policy)

    # apply the policy
    s3_bucket_policy_response = s3_client().put_bucket_policy(Bucket=s3_bucket_name,
                                                              Policy=policy)

    # print the response
    print(f" ** Response when applying policy to {s3_bucket_name} is {s3_bucket_policy_response} ")
    return s3_bucket_policy_response
```

4) Versioning Objects In S3

Amazon S3 can keep multiple versions of an object, but versioning is not enabled by default. In a versioning-enabled bucket, each object version has a unique version ID.

For buckets on which versioning has never been enabled, the version ID of objects is null.
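To check the versioning setting afterwards, boto3's `get_bucket_versioning` returns a dict whose `Status` key is `'Enabled'` or `'Suspended'`, and which has no `Status` key at all if versioning was never enabled. The hypothetical helpers below (`versioning_state` and `is_success` are not boto3 functions) interpret such a response and check its HTTP status. They only inspect plain dicts, so they run without AWS access.

```python
def versioning_state(response: dict) -> str:
    """Interpret a get_bucket_versioning() response (hypothetical helper).

    'Status' is 'Enabled' or 'Suspended'; S3 leaves it out entirely for a
    bucket on which versioning has never been enabled.
    """
    return response.get("Status", "Never enabled")


def is_success(response: dict) -> bool:
    """True if a boto3 response dict reports a 2xx HTTP status code.

    Success codes differ per operation (for example, 200 for
    put_bucket_versioning and 204 for delete_bucket), so checking the whole
    2xx range is less brittle than comparing against a single value.
    """
    status = response.get("ResponseMetadata", {}).get("HTTPStatusCode", 0)
    return 200 <= status < 300


# Example with plain dicts shaped like boto3 responses:
print(versioning_state({"Status": "Enabled"}))                    # Enabled
print(versioning_state({}))                                        # Never enabled
print(is_success({"ResponseMetadata": {"HTTPStatusCode": 204}}))  # True
```

With boto3, failed calls raise `botocore.exceptions.ClientError` instead of returning a non-2xx response, so the status check is mainly a sanity check. To see the current setting, pass `s3_client().get_bucket_versioning(Bucket=...)` to `versioning_state`.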

```python
# ------------------------------------------------------------------------------
def s3_version_bucket_files(s3_bucket_name):
    """Enable versioning on the given bucket."""
    client = s3_client()
    version_bucket_response = client.put_bucket_versioning(
        Bucket=s3_bucket_name,
        VersioningConfiguration={'Status': 'Enabled'})

    # put_bucket_versioning returns HTTP 200 on success
    if version_bucket_response['ResponseMetadata']['HTTPStatusCode'] == 200:
        print(f" *** Successfully applied Versioning to {s3_bucket_name}")
    else:
        print(" *** Failed while applying Versioning to bucket")
```

5) Validating Bucket and Bucket Policy

Now that we have the code for creating the bucket and its policy, we will put everything together in one script that creates the bucket, applies the policy and versioning, and then validates the result by listing the buckets and the bucket policy.

```python
import json

import boto3


# ------------------------------------------------------------------------------
def s3_client():
    """Create and return a boto3 S3 client."""
    session = boto3.session.Session()
    return session.client('s3')


# ------------------------------------------------------------------------------
def list_s3_buckets():
    """Print the S3 buckets in your AWS account."""
    buckets_response = s3_client().list_buckets()

    # check that the bucket list was returned successfully
    if buckets_response['ResponseMetadata']['HTTPStatusCode'] == 200:
        for s3_bucket in buckets_response['Buckets']:
            print(f" *** Bucket Name: {s3_bucket['Name']} - Created on {s3_bucket['CreationDate']} \n")
    else:
        print(" *** Failed while trying to get buckets list from your account")


# ------------------------------------------------------------------------------
def s3_create_bucket(bucket_name):
    """Create an S3 bucket in the session's current region."""
    current_region = boto3.session.Session().region_name
    client = s3_client()
    print(f" *** You are in {current_region} AWS region..\n Bucket name passed is - {bucket_name}")

    # us-east-1 is the default location and must not be passed as a LocationConstraint
    if current_region in (None, 'us-east-1'):
        response = client.create_bucket(Bucket=bucket_name)
    else:
        response = client.create_bucket(
            Bucket=bucket_name,
            CreateBucketConfiguration={'LocationConstraint': current_region})

    print(f" *** Response when creating bucket - {response} ")
    return response


# ------------------------------------------------------------------------------
def s3_create_bucket_policy(s3_bucket_name):
    """Apply a bucket policy. For test purposes it allows all actions; tighten it later."""
    s3_bucket_policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "AddPerm",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": f"arn:aws:s3:::{s3_bucket_name}/*",
            # documentation placeholder range: replace with your own IP/CIDR
            "Condition": {"IpAddress": {"aws:SourceIp": "203.0.113.0/24"}},
        }],
    }
    response = s3_client().put_bucket_policy(Bucket=s3_bucket_name,
                                             Policy=json.dumps(s3_bucket_policy))
    print(f" ** Response when applying policy to {s3_bucket_name} is {response} ")
    return response


# ------------------------------------------------------------------------------
def s3_list_bucket_policy(s3_bucket_name):
    """Print the bucket policy (returned as a JSON string under 'Policy')."""
    response = s3_client().get_bucket_policy(Bucket=s3_bucket_name)
    print(json.dumps(json.loads(response['Policy']), indent=2))


# ------------------------------------------------------------------------------
def s3_version_bucket_files(s3_bucket_name):
    """Enable versioning on the bucket."""
    response = s3_client().put_bucket_versioning(
        Bucket=s3_bucket_name,
        VersioningConfiguration={'Status': 'Enabled'})

    # put_bucket_versioning returns HTTP 200 on success
    if response['ResponseMetadata']['HTTPStatusCode'] == 200:
        print(f" *** Successfully applied Versioning to {s3_bucket_name}")
    else:
        print(" *** Failed while applying Versioning to bucket")


if __name__ == '__main__':
    list_s3_buckets()
    # bucket names are global: change this if the name is already taken
    bucket_name = "flask-small-file-uploads"
    s3_create_bucket(bucket_name)
    s3_create_bucket_policy(bucket_name)
    s3_list_bucket_policy(bucket_name)
    s3_version_bucket_files(bucket_name)
    list_s3_buckets()
```

4) Uploading Small Files To S3 With Python SDK

Amazon S3 provides a couple of ways to upload files. Depending on the file size, you can upload a small file in a single request with the put_object method, or use a multipart upload for large files. I will cover uploading large files to S3 with Flask in a separate tutorial; here we focus on small files.
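As a rough guide to that choice: a single PUT (put_object) is limited to 5 GB, and AWS suggests considering multipart uploads once objects reach about 100 MB. The hypothetical helper below (`choose_upload_method` is not a boto3 function) encodes those two thresholds. Note that boto3's higher-level `upload_file`/`upload_fileobj` methods switch to multipart automatically above a configurable threshold (`TransferConfig`'s `multipart_threshold`).

```python
MB = 1024 * 1024
GB = 1024 * MB

SINGLE_PUT_LIMIT = 5 * GB             # hard limit for one put_object call
MULTIPART_SUGGESTED_FROM = 100 * MB   # size at which AWS suggests multipart


def choose_upload_method(size_bytes: int) -> str:
    """Pick an upload strategy for an object of the given size (hypothetical helper)."""
    if size_bytes < 0:
        raise ValueError("size_bytes must be non-negative")
    if size_bytes > SINGLE_PUT_LIMIT:
        return "multipart (required)"
    if size_bytes >= MULTIPART_SUGGESTED_FROM:
        return "multipart (recommended)"
    return "put_object"


print(choose_upload_method(2 * MB))  # put_object
```

With the 16 MB `MAX_CONTENT_LENGTH` that app.py sets later, every upload in this tutorial stays on the put_object path.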

To upload an object with put_object, we pass the file content (bytes or a file-like object) as Body, along with the bucket name and the key. As you may remember from the introduction, the key identifies the location of your file in the S3 bucket. Because S3 works as a key-value store, the key is mandatory. (The related upload_file method takes a local file path instead of the file content.)
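Because every object is addressed by its key, listing a bucket means listing keys. A single `list_objects_v2` call returns at most 1,000 keys, so boto3's paginator is the usual way to walk a whole bucket. The hypothetical helper below (`iter_object_keys` is not a boto3 function) consumes the result pages; the sample pages are hand-written dicts shaped like real responses, so the example runs without AWS.

```python
from typing import Iterable, Iterator


def iter_object_keys(pages: Iterable[dict]) -> Iterator[str]:
    """Yield every object key from list_objects_v2 result pages (hypothetical helper).

    A page has no 'Contents' entry when there is nothing to list (for
    example, an empty bucket), so a missing entry is treated as empty.
    """
    for page in pages:
        for obj in page.get("Contents", []):
            yield obj["Key"]


# With boto3 the pages would come from the built-in paginator, e.g.:
#   pages = s3_client().get_paginator("list_objects_v2").paginate(Bucket="flask-small-file-uploads")
sample_pages = [{"Contents": [{"Key": "report.xlsx"}, {"Key": "uploads/2021/budget.xls"}]},
                {"KeyCount": 0}]
print(list(iter_object_keys(sample_pages)))
```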

The following function uploads a small file to S3.

```python
# ------------------------------------------------------------------------------
def s3_upload_small_files(file_obj, s3_bucket_name, inp_file_key, content_type):
    """Upload a small file (bytes or a file-like object) to S3 with put_object."""
    client = s3_client()
    upload_file_response = client.put_object(Body=file_obj,
                                             Bucket=s3_bucket_name,
                                             Key=inp_file_key,
                                             ContentType=content_type)
    print(f" ** Response - {upload_file_response}")
    return upload_file_response
```

5) Integration With Flask Web Application

Let us set up the app with the following structure, and then we will go into the details.

```text
~/Flask-Upload-Small-Files-S3
|-- app.py
|__ /views
|   |-- s3.py
|__ /templates
    |__ /includes
    |   |-- _flashmsg.html
    |   |-- _formhelpers.html
    |__ main.html
```

Time to discuss the components in detail before we execute the code. We will first go over the design steps being implemented; the design is simple, and I will do my best to explain it that way.

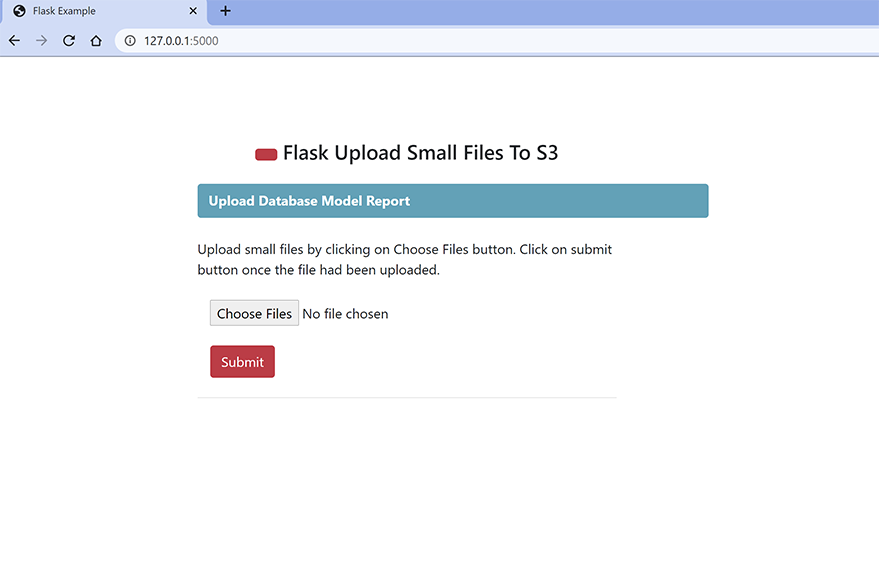

5a) Components: templates/main.html

I will use a single template (main.html), which is simple enough for this demonstration.

This is the home page where the user uploads a file to S3. The HTML template is quite simple, with just a file-upload field and a submit button.

The template displays Flask flash messages, which the application code sets based on the validation results. If the user clicks the submit button without choosing a file, or uploads a file whose extension is not allowed, an error message appears on the main page; otherwise, a success message appears.

```html
<!doctype html>
<html lang="en">
<head>
    <!-- Required meta tags -->
    <meta charset="utf-8">
    <meta name="viewport" content="width=device-width, initial-scale=1, shrink-to-fit=no">
    <!-- Bootstrap CSS -->
    <link rel="stylesheet" href="https://maxcdn.bootstrapcdn.com/bootstrap/4.0.0/css/bootstrap.min.css"
          integrity="sha384-Gn5384xqQ1aoWXA+058RXPxPg6fy4IWvTNh0E263XmFcJlSAwiGgFAW/dAiS6JXm" crossorigin="anonymous">
    <title>Flask Example</title>
</head>
<body class="bg-gradient-white">
<br>
<div id="page-wrapper">
    <div class="container">
        {# flash messages (success / danger) are rendered once, by this include #}
        {% include 'includes/_flashmsg.html' %}
        {% from "includes/_formhelpers.html" import render_field %}
        <div class="row justify-content-center">
            <div class="col-xl-10 col-xl-12 col-xl-9">
                <div class="card o-hidden border-0 shadow-lg my-5">
                    <div class="card-body p-0">
                        <div class="row">
                            <div class="col-lg-6">
                                <div class="p-4">
                                    <div class="text-center">
                                        <h1 class="h4 text-gray-900 mb-4">
                                            <button type="button" class="btn btn-danger btn-circle-sm"><i
                                                    class="fa fa-mask"></i></button>
                                            Flask Upload Small Files To S3
                                        </h1>
                                    </div>
                                    <a href="#" class="btn btn-info btn-icon-split text-left"
                                       style="height:40px; width:600px ;margin: auto ;display:block">
                                        <span class="icon text-white-50">
                                            <i class="fas fa-upload text-white"></i>
                                        </span>
                                        <span class="mb-0 font-weight-bold text-800 text-white">Upload Database Model Report</span>
                                    </a>
                                    <br>
                                    <p class="mb-4">Upload a small file by clicking the Choose File button.
                                        Click the Submit button once you have chosen the file.</p>
                                    <div class="container">
                                        <!-- the field name "file" must match request.files['file'] in app.py -->
                                        <form method="POST" action="/upload_files_to_s3"
                                              enctype="multipart/form-data">
                                            <dl>
                                                <p>
                                                    <input type="file" name="file" autocomplete="off" required>
                                                </p>
                                            </dl>
                                            <div id="content">
                                                <div class="form-group row">
                                                    <div class="col-sm-4 col-form-label">
                                                        <input type="submit" class="btn btn-danger">
                                                    </div>
                                                </div>
                                            </div>
                                        </form>
                                    </div>
                                    <hr>
                                </div>
                            </div>
                        </div>
                    </div>
                </div>
            </div>
        </div>
    </div>
</div>
<script src="https://code.jquery.com/jquery-3.2.1.slim.min.js"
        integrity="sha384-KJ3o2DKtIkvYIK3UENzmM7KCkRr/rE9/Qpg6aAZGJwFDMVNA/GpGFF93hXpG5KkN"
        crossorigin="anonymous"></script>
<script src="https://cdnjs.cloudflare.com/ajax/libs/popper.js/1.12.9/umd/popper.min.js"
        integrity="sha384-ApNbgh9B+Y1QKtv3Rn7W3mgPxhU9K/ScQsAP7hUibX39j7fakFPskvXusvfa0b4Q"
        crossorigin="anonymous"></script>
<script src="https://maxcdn.bootstrapcdn.com/bootstrap/4.0.0/js/bootstrap.min.js"
        integrity="sha384-JZR6Spejh4U02d8jOt6vLEHfe/JQGiRRSQQxSfFWpi1MquVdAyjUar5+76PVCmYl"
        crossorigin="anonymous"></script>
</body>
</html>
```

5b) Components: templates/includes/_flashmsg.html

```html
{% with messages = get_flashed_messages(with_categories=true) %}
<!-- Categories: success (green), info (blue), warning (yellow), danger (red) -->
{% if messages %}
    {% for category, message in messages %}
        <div class="alert alert-{{ category }} alert-dismissible my-4" role="alert">
            {{ message }}
        </div>
    {% endfor %}
{% endif %}
{% endwith %}
```

5c) Components: templates/includes/_formhelpers.html

```html
{% macro render_field(field) %}
    <dt>{{ field.label }}</dt>
    {{ field(**kwargs)|safe }}
    {% if field.errors %}
        {% for error in field.errors %}
            <span class="help-inline">{{ error }}</span>
        {% endfor %}
    {% endif %}
{% endmacro %}
```

5d) Components: views/s3.py

The code in s3.py is largely the same as what we discussed in the sections above, with a few additions (deleting a bucket and reading an object back). Here is the complete file.

```python
import json

import boto3


# ------------------------------------------------------------------------------
def s3_client():
    """Create and return a boto3 S3 client."""
    session = boto3.session.Session()
    return session.client('s3')


# ------------------------------------------------------------------------------
def list_s3_buckets():
    """Print the S3 buckets in your AWS account."""
    buckets_response = s3_client().list_buckets()

    # check that the bucket list was returned successfully
    if buckets_response['ResponseMetadata']['HTTPStatusCode'] == 200:
        for s3_bucket in buckets_response['Buckets']:
            print(f" *** Bucket Name: {s3_bucket['Name']} - Created on {s3_bucket['CreationDate']} \n")
    else:
        print(" *** Failed while trying to get buckets list from your account")


# ------------------------------------------------------------------------------
def s3_create_bucket(bucket_name):
    """Create an S3 bucket in the session's current region."""
    current_region = boto3.session.Session().region_name
    print(f" *** You are in {current_region} AWS region..\n Bucket name passed is - {bucket_name}")

    # us-east-1 is the default location and must not be passed as a LocationConstraint
    if current_region in (None, 'us-east-1'):
        response = s3_client().create_bucket(Bucket=bucket_name)
    else:
        response = s3_client().create_bucket(
            Bucket=bucket_name,
            CreateBucketConfiguration={'LocationConstraint': current_region})

    print(f" *** Response when creating bucket - {response} ")
    return response


# ------------------------------------------------------------------------------
def s3_create_bucket_policy(s3_bucket_name):
    """Apply a bucket policy. For test purposes it allows all actions; tighten it later."""
    s3_bucket_policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "AddPerm",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": f"arn:aws:s3:::{s3_bucket_name}/*",
            # documentation placeholder range: replace with your own IP/CIDR
            "Condition": {"IpAddress": {"aws:SourceIp": "203.0.113.0/24"}},
        }],
    }
    response = s3_client().put_bucket_policy(Bucket=s3_bucket_name,
                                             Policy=json.dumps(s3_bucket_policy))
    print(f" ** Response when applying policy to {s3_bucket_name} is {response} ")
    return response


# ------------------------------------------------------------------------------
def s3_list_bucket_policy(s3_bucket_name):
    """Print the bucket policy (returned as a JSON string under 'Policy')."""
    response = s3_client().get_bucket_policy(Bucket=s3_bucket_name)
    print(json.dumps(json.loads(response['Policy']), indent=2))


# ------------------------------------------------------------------------------
def s3_delete_bucket(s3_bucket_name):
    """Delete a bucket. It must be empty, including all object versions."""
    response = s3_client().delete_bucket(Bucket=s3_bucket_name)

    # delete_bucket returns HTTP 204 on success
    if response['ResponseMetadata']['HTTPStatusCode'] == 204:
        print(f" *** Successfully deleted bucket {s3_bucket_name}")
    else:
        print(" *** Delete bucket failed")


# ------------------------------------------------------------------------------
def s3_version_bucket_files(s3_bucket_name):
    """Enable versioning on the bucket."""
    response = s3_client().put_bucket_versioning(
        Bucket=s3_bucket_name,
        VersioningConfiguration={'Status': 'Enabled'})

    # put_bucket_versioning returns HTTP 200 on success
    if response['ResponseMetadata']['HTTPStatusCode'] == 200:
        print(f" *** Successfully applied Versioning to {s3_bucket_name}")
    else:
        print(" *** Failed while applying Versioning to bucket")


# ------------------------------------------------------------------------------
def s3_upload_small_files(file_obj, s3_bucket_name, inp_file_key, content_type):
    """Upload a small file (bytes or a file-like object) to S3 with put_object."""
    upload_file_response = s3_client().put_object(Body=file_obj,
                                                  Bucket=s3_bucket_name,
                                                  Key=inp_file_key,
                                                  ContentType=content_type)
    print(f" ** Response - {upload_file_response}")
    return upload_file_response


# ------------------------------------------------------------------------------
def s3_read_objects(s3_bucket_name, inp_file_key):
    """Read an object back from S3 (use this to validate an upload)."""
    read_object_response = s3_client().get_object(Bucket=s3_bucket_name,
                                                  Key=inp_file_key)
    print(f" ** Response - {read_object_response}")
    return read_object_response
```

5e) Components: app.py

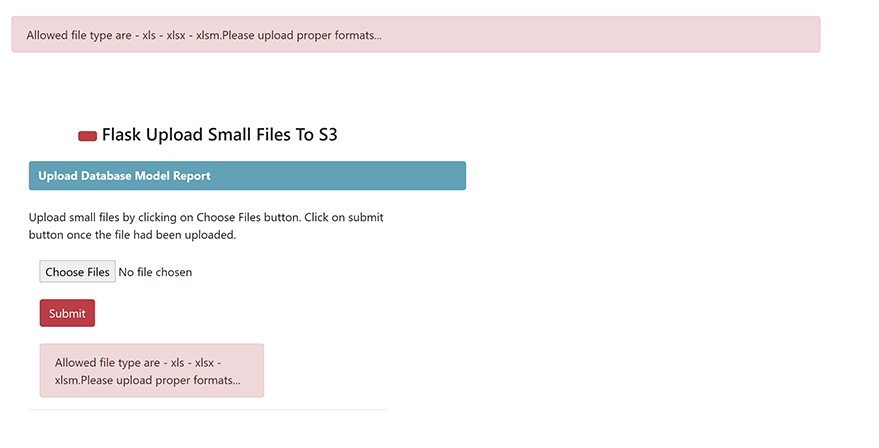

app.py is the main orchestrator of our program. We first restrict the allowed file extensions to Excel spreadsheets using the ALLOWED_EXTENSIONS variable. The index function (the ‘/’ route) simply displays the main.html page.

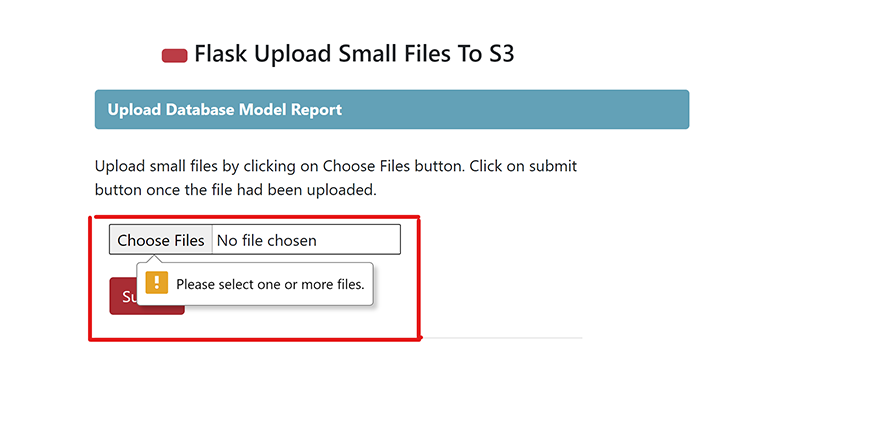

The upload_files_to_s3 function is triggered when the user clicks the submit button on the main.html page, and it handles the following scenarios:

- If no file was selected, or the file extension is not in ALLOWED_EXTENSIONS, the function flashes an error message.

- If the file extension is allowed, the file is uploaded to S3 using the s3_upload_small_files function in views/s3.py.
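Keeping these checks free of Flask makes them easy to unit-test. The hypothetical helper below (`validate_upload` is not part of the tutorial's app.py) maps a file name to a (flash category, message) pair following the scenarios above; the category names match the Bootstrap alert classes used by the templates.

```python
from __future__ import annotations

ALLOWED_EXTENSIONS = {"xls", "xlsx", "xlsm"}


def validate_upload(filename: str | None) -> tuple[str, str]:
    """Return a (flash category, message) pair for an uploaded file name (hypothetical helper)."""
    if not filename:
        return "danger", "No file selected"
    # the extension is the text after the last dot, compared case-insensitively
    ext = filename.rsplit(".", 1)[1].lower() if "." in filename else ""
    if ext not in ALLOWED_EXTENSIONS:
        return "danger", "Allowed file types are xls, xlsx and xlsm"
    return "success", f"{filename} can be uploaded"


print(validate_upload("Report.XLSX"))  # ('success', 'Report.XLSX can be uploaded')
print(validate_upload("notes.txt"))    # ('danger', 'Allowed file types are xls, xlsx and xlsm')
```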

```python
import os

from flask import Flask, render_template, redirect, url_for, request, flash
from flask_bootstrap import Bootstrap
from werkzeug.utils import secure_filename

from views.s3 import s3_upload_small_files

app = Flask(__name__)
bootstrap = Bootstrap(app)
# app.config.from_object('settings')  # optional: load extra config from a settings.py module
app.secret_key = os.urandom(24)  # needed for flash(); a random key invalidates sessions on restart
# reject request bodies larger than 16 MB (Flask answers with 413 Request Entity Too Large)
app.config['MAX_CONTENT_LENGTH'] = 16 * 1024 * 1024

# ------------------------------------------------------------------------------
# Set allowed extensions to allow only Excel files to be uploaded
# ------------------------------------------------------------------------------
ALLOWED_EXTENSIONS = {'xls', 'xlsx', 'xlsm'}
BUCKET_NAME = "flask-small-file-uploads"


def allowed_file(filename):
    return '.' in filename and filename.rsplit('.', 1)[1].lower() in ALLOWED_EXTENSIONS


# ------------------------------------------------------------------------------
@app.route('/', methods=['GET', 'POST'])
def index():
    if request.method == 'POST':
        print("*** Inside the template")
    return render_template('main.html')


# ------------------------------------------------------------------------------
@app.route('/upload_files_to_s3', methods=['GET', 'POST'])
def upload_files_to_s3():
    if request.method == 'POST':
        # no file part in the request
        if 'file' not in request.files:
            flash(' *** No files Selected', 'danger')
            return redirect(url_for('index'))

        file_to_upload = request.files['file']
        # content type of the uploaded file itself (request.mimetype would be multipart/form-data)
        content_type = file_to_upload.mimetype

        # an empty file name means no file was chosen
        if file_to_upload.filename == '':
            flash(' *** No files Selected', 'danger')
            return redirect(url_for('index'))

        if allowed_file(file_to_upload.filename):
            file_name = secure_filename(file_to_upload.filename)
            print(f" *** The file name to upload is {file_name}")
            # small files only: the whole content is read into memory
            s3_upload_small_files(file_to_upload.read(), BUCKET_NAME, file_name, content_type)
            flash(f'Success - {file_name} is uploaded to {BUCKET_NAME}', 'success')
        else:
            flash('Allowed file types are - xls - xlsx - xlsm. Please upload proper formats...', 'danger')

    return redirect(url_for('index'))


if __name__ == '__main__':
    app.run(debug=True)
```

This pretty much concludes the programming part.

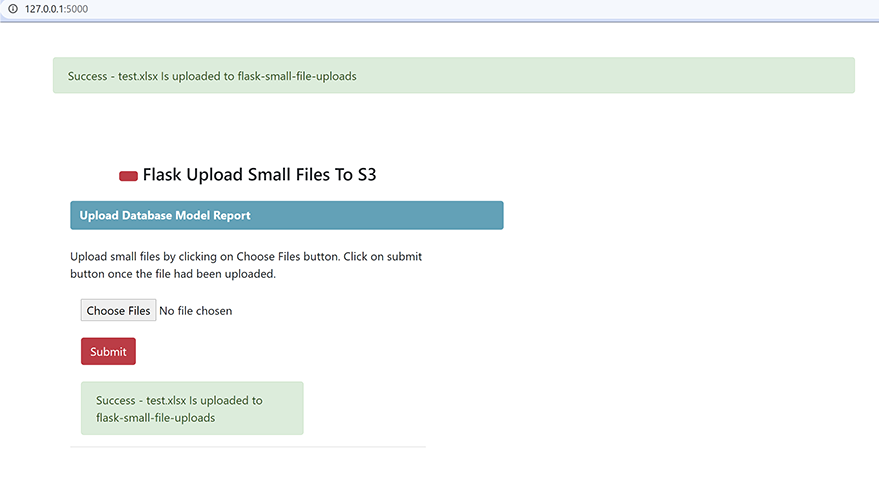

6) Final Step: Executing The Code

Home Page:

SCENARIO 1:

No file selected: the user clicks the submit button without selecting a file.

SCENARIO 2:

Wrong file extension: the user tries to upload a file whose extension is not in ALLOWED_EXTENSIONS.

SCENARIO 3:

File upload: the user uploads a file with an allowed extension.

You can verify the uploaded file's details by running the s3_read_objects function in views/s3.py.
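If you only need the metadata of an upload, `head_object` returns it without downloading the file body. The hypothetical helper below (`summarize_object` is not part of boto3 or views/s3.py) picks the fields worth checking from a `get_object` or `head_object` response dict: `ContentType`, `ContentLength`, `VersionId`, and `LastModified`.

```python
def summarize_object(response: dict) -> dict:
    """Pick the fields worth checking from a get_object()/head_object() response (hypothetical helper)."""
    return {
        "content_type": response.get("ContentType"),
        "size_bytes": response.get("ContentLength"),
        "version_id": response.get("VersionId"),  # present when the bucket is versioned
        "last_modified": response.get("LastModified"),
    }


# Usage against S3 (needs credentials), e.g.:
#   summarize_object(s3_client().head_object(Bucket="flask-small-file-uploads", Key="report.xlsx"))
print(summarize_object({"ContentType": "application/vnd.ms-excel", "ContentLength": 2048}))
```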

If you liked this article and it helped you in any way, feel free to like it and subscribe to this website for more tutorials.

If you think this article could help someone, feel free to share it.