An Introduction to Clustering in Node.js

Picture this: your Node.js app starts to slow down as it gets bombarded with user requests. It's like a traffic jam for your app, and no developer likes dealing with that.

The Jamstack architecture, a term coined by Mathias Biilmann, the co-founder of Netlify, encompasses a set of structural practices that rely on client-side JavaScript, reusable APIs, and prebuilt markup to build websites and applications. At its core, the Jamstack advocates for rendering web applications into static HTML files during the build process and serving them efficiently to clients.

Next.js is popular for its seamless support of static site generation (SSG) and server-side rendering (SSR), which offers developers the flexibility to choose the most appropriate rendering method for each application page. Traditionally, Next.js utilized functions like getStaticProps, getStaticPaths, and getServerSideProps to manage how pages are rendered. Depending on the data requirements, these functions determine whether a page should be generated at build time or on each server request.
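As a rough illustration of how these three functions divide the work, here is a minimal sketch. The `Post` type and the in-memory `POSTS` array are hypothetical stand-ins for a real data source, and the context parameter is typed by hand to keep the snippet self-contained; a real project would import the official types from `next`. The two strategies appear side by side only for comparison, since a single page exports either `getStaticProps` or `getServerSideProps`, not both.

```typescript
// Hypothetical data model and in-memory data source, used only for this sketch.
type Post = { slug: string; title: string };

const POSTS: Post[] = [
  { slug: "hello-world", title: "Hello, world" },
  { slug: "clustering", title: "Clustering in Node.js" },
];

// Build time: lists the dynamic routes that should be pre-rendered.
export async function getStaticPaths() {
  return {
    paths: POSTS.map((post) => ({ params: { slug: post.slug } })),
    fallback: false, // slugs not listed above result in a 404
  };
}

// Build time: supplies the props for one pre-rendered page.
// The context type is a simplified, hand-written assumption.
export async function getStaticProps(context: { params: { slug: string } }) {
  const post = POSTS.find((p) => p.slug === context.params.slug);
  if (!post) {
    return { notFound: true as const };
  }
  return { props: { post } };
}

// Request time: runs on every request, so the props can reflect
// the moment the page was asked for.
// (Shown for comparison; it would live in a different page file.)
export async function getServerSideProps() {
  return { props: { renderedAt: new Date().toISOString() } };
}
```

The choice between the two comes down to how fresh the data has to be: content that changes rarely suits build-time generation, while per-request data calls for server-side rendering.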

Vue.js and Nuxt.js seamlessly complement each other for SSR. Vue.js provides the core functionality for dynamic UIs, while Nuxt.js enables server-side rendering of Vue.js components, ensuring fast initial page loads and optimal SEO. Nuxt.js simplifies SSR application development, allowing developers to focus on crafting exceptional user experiences without getting bogged down by SSR intricacies.

Central to the development of Node.js native modules is Node-API, a toolkit available since Node.js 8.0.0. Node-API serves as an intermediary between C/C++ code and the JavaScript engine used by Node.js, so native code can interact with and manipulate JavaScript objects. It is also independent of the underlying JavaScript engine, which keeps native modules stable and compatible across Node.js versions.

A well-optimized Node.js application not only ensures a smoother user experience but also scales effectively to meet demand.

By combining Nest.js and AWS Lambda, developers can harness the benefits of serverless computing to build flexible, resilient, and highly scalable microservices.

Clerk.dev is more than just an authentication tool; it's a comprehensive platform designed to simplify and enhance user management and security in web applications. With a focus on developer experience and application flexibility, Clerk.dev emerges as a robust alternative to more traditional solutions like Auth0. Let's explore its standout features and advantages.

Using React Query for data fetching is simple and effective. By taking care of caching, background updates, and error handling for you, React Query streamlines and improves data retrieval.

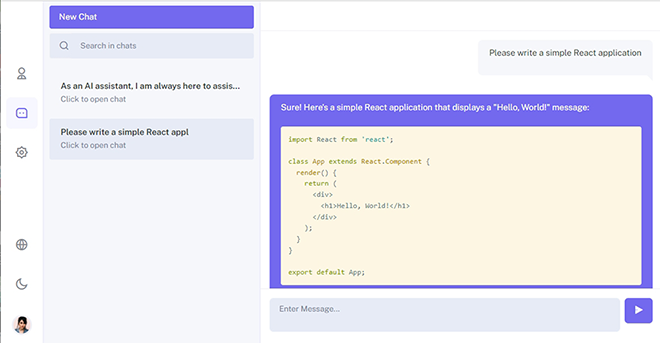

In June, OpenAI announced that third-party applications' APIs can be passed into the GPT model, opening up a wide range of possibilities for creating specialized agents. Our team decided to build our own chat application for working with OpenAI's GPT-4 and other ML/LLM models, one that can be customized to the company's internal needs.